After conducting more than 500 in-depth website audits over the past 12 years, I’ve seen clear patterns about what works and what doesn’t in SEO.

I’ve seen almost everything that can go right – and wrong – with websites of different types.

To help you avoid costly SEO mistakes, I’m sharing 11 practical lessons from critical SEO areas, such as technical SEO, on-page SEO, content strategy, SEO tools and processes, and off-page SEO.

It took me more than a decade to discover all these lessons. By reading this article, you can apply these insights to save yourself and your SEO clients time, money, and frustration – in less than an hour.

Lesson #1: Technical SEO Is Your Foundation For SEO Success

- Lesson: You should always start any SEO work with technical fundamentals; crawlability and indexability determine whether search engines can even see your site.

Technical SEO ensures search engines can crawl, index, and fully understand your content. If search engines can’t properly access your site, no amount of quality content or backlinks will help.

After auditing over 500 websites, I believe technical SEO is the most critical aspect of SEO, which comes down to two fundamental concepts:

- Crawlability: Can search engines easily find and navigate your website’s pages?

- Indexability: Once crawled, can your pages appear in search results?

If your pages fail these two tests, they won’t even enter the SEO game, and your SEO efforts won’t matter.

I strongly recommend regularly monitoring your technical SEO health using at least two essential tools: Google Search Console and Bing Webmaster Tools.

When starting any SEO audit, always ask yourself these two critical questions:

- Can Google, Bing, or other search engines crawl and index my important pages?

- Am I letting search engine bots crawl only the right pages?

This step alone can save you huge headaches and ensure there are no major technical SEO blockages.
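The two questions above can be spot-checked with a short script. The following is a minimal sketch that uses Python’s standard `urllib.robotparser` to test whether a given user agent may crawl a URL. The robots.txt content, the URLs, and the `is_crawlable` helper are made up for illustration. It covers crawlability only (not indexability signals such as `noindex`), and the standard-library parser may resolve conflicting rules differently from Google.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/products/shoes",  # important page
        "https://example.com/cart/add?id=1",   # page bots should skip
    ):
        print(url, is_crawlable(SAMPLE_ROBOTS, "Googlebot", url))
```

Running a list of important URLs and a list of low-value URLs through a check like this gives a quick first answer to both questions before opening Google Search Console.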

→ Read more: 13 Steps To Boost Your Site’s Crawlability And Indexability

Lesson #2: JavaScript SEO Can Easily Go Wrong

- Lesson: You should be cautious when relying heavily on JavaScript. It can easily prevent Google from seeing and indexing critical content.

JavaScript adds great interactivity, but search engines (even ones as smart as Google) often struggle to process it reliably.

Google handles JavaScript in three steps (crawling, rendering, and indexing) using an evergreen Chromium browser. However, rendering delays (from minutes to weeks) and limited resources can prevent important content from getting indexed.

I’ve audited many sites whose SEO was failing because key JavaScript-loaded content wasn’t visible to Google.

Typically, important content was missing from the initial HTML, it didn’t load properly during rendering, or there were significant differences between the raw HTML and the rendered HTML when it came to content or meta elements.

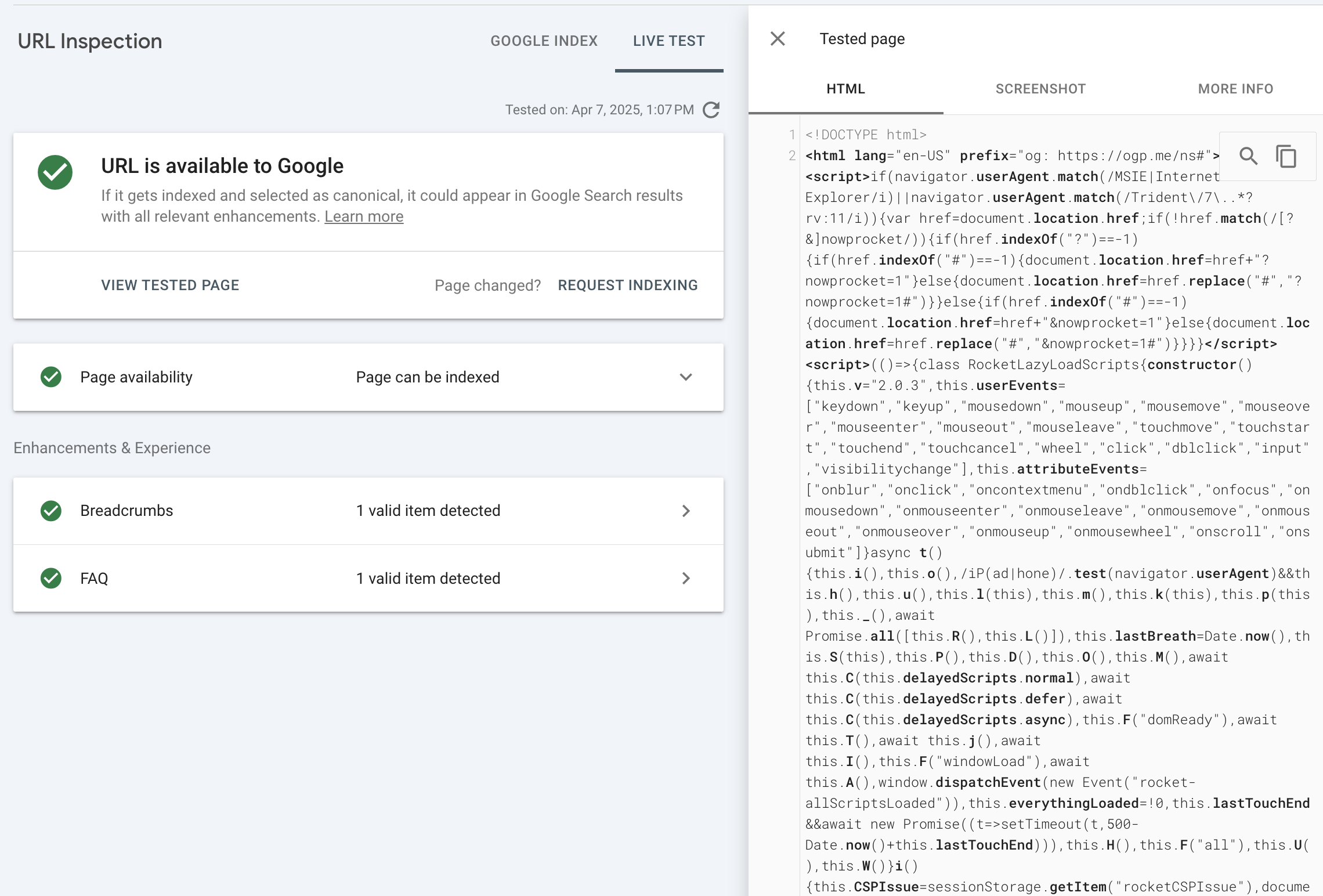

You should always test whether Google can see your JavaScript-based content:

- Use the Live URL Test in Google Search Console and verify the rendered HTML.

The Google Search Console Live Test allows you to see the rendered HTML. (Screenshot from Google Search Console, April 2025)

- Or, search Google for a unique sentence from your JavaScript content (in quotes). If your content isn’t showing up, Google probably can’t index it.*

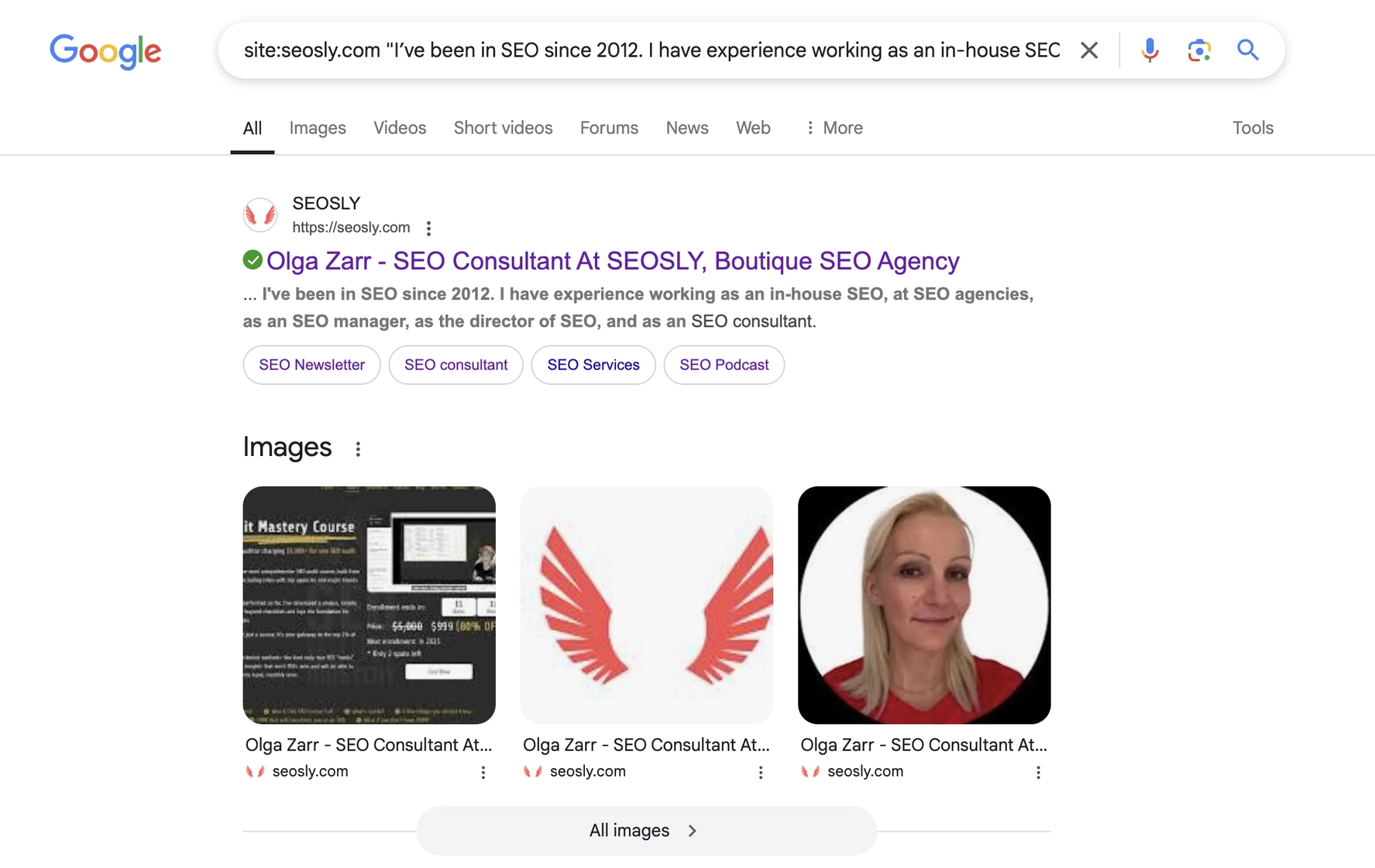

The site: search in Google allows you to quickly check whether a given piece of text on a given page is indexed by Google. (Screenshot from Google Search, April 2025)

*This will only work for URLs that are already in Google’s index.

Here are a few best practices regarding JavaScript SEO:

- Critical content in HTML: You should include titles, descriptions, and important content directly in the initial HTML so search engines can index it immediately. Remember that Google doesn’t scroll or click.

- Server-Side Rendering (SSR): You should consider implementing SSR to serve fully rendered HTML. It’s more reliable and less resource-intensive for search engines.

- Proper robots.txt setup: Websites should not block essential JavaScript files needed for rendering, as blocking them prevents indexing.

- Use crawlable URLs: You should ensure each page has a unique, crawlable URL. You should also avoid URL fragments (#section) for important content; they often don’t get indexed.
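To make the raw-versus-rendered comparison concrete, here is a minimal sketch using only Python’s standard `html.parser`. It assumes you already have both versions of a page as strings (for example, the server response and the rendered HTML copied from the Live URL Test); the sample markup, the class, and the function names are hypothetical.

```python
from html.parser import HTMLParser


class HeadAndTextExtractor(HTMLParser):
    """Collects the <title>, the meta description, and visible text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.text_parts = []
        self._in_title = False
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def summarize(html: str) -> dict:
    parser = HeadAndTextExtractor()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "meta_description": parser.meta_description.strip(),
        "text": " ".join(parser.text_parts),
    }


def compare_raw_and_rendered(raw_html: str, rendered_html: str, sentence: str) -> dict:
    """Report differences that matter for indexing."""
    raw, rendered = summarize(raw_html), summarize(rendered_html)
    return {
        "title_changed": raw["title"] != rendered["title"],
        "meta_description_changed": raw["meta_description"] != rendered["meta_description"],
        "sentence_in_raw": sentence in raw["text"],
        "sentence_in_rendered": sentence in rendered["text"],
    }


# Hypothetical pages: the raw HTML is an empty app shell.
RAW = ('<html><head><title>Shop</title></head><body>'
       '<div id="app"></div><script>render()</script></body></html>')
RENDERED = ('<html><head><title>Blue Running Shoes | Shop</title>'
            '<meta name="description" content="Lightweight shoes."></head>'
            '<body><div id="app"><h1>Blue Running Shoes</h1>'
            '<p>Free shipping on all orders.</p></div></body></html>')

if __name__ == "__main__":
    print(compare_raw_and_rendered(RAW, RENDERED, "Free shipping on all orders."))
```

A sentence that appears only in the rendered version, or a title that changes after rendering, is the kind of gap described above and a reason to consider moving that content into the initial HTML.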

For a full list of common JavaScript SEO mistakes and best practices, you can refer to the JavaScript SEO guide for SEO professionals and developers.

→ Read more: 6 JavaScript Optimization Tips From Google

Lesson #3: Crawl Budget Matters, But Only If Your Website Is Huge

- Lesson: You should only worry about crawl budget if your website has hundreds of thousands or millions of pages.

Crawl budget refers to how many pages a search engine like Google crawls on your site within a certain timeframe. It’s determined by two main factors:

- Crawl capacity limit: This prevents Googlebot from overwhelming your server with too many simultaneous requests.

- Crawl demand: This is based on your site’s popularity and how often content changes.

No matter what you hear or read on the internet, most websites don’t need to stress about crawl budget at all. Google typically handles crawling efficiently for smaller websites.

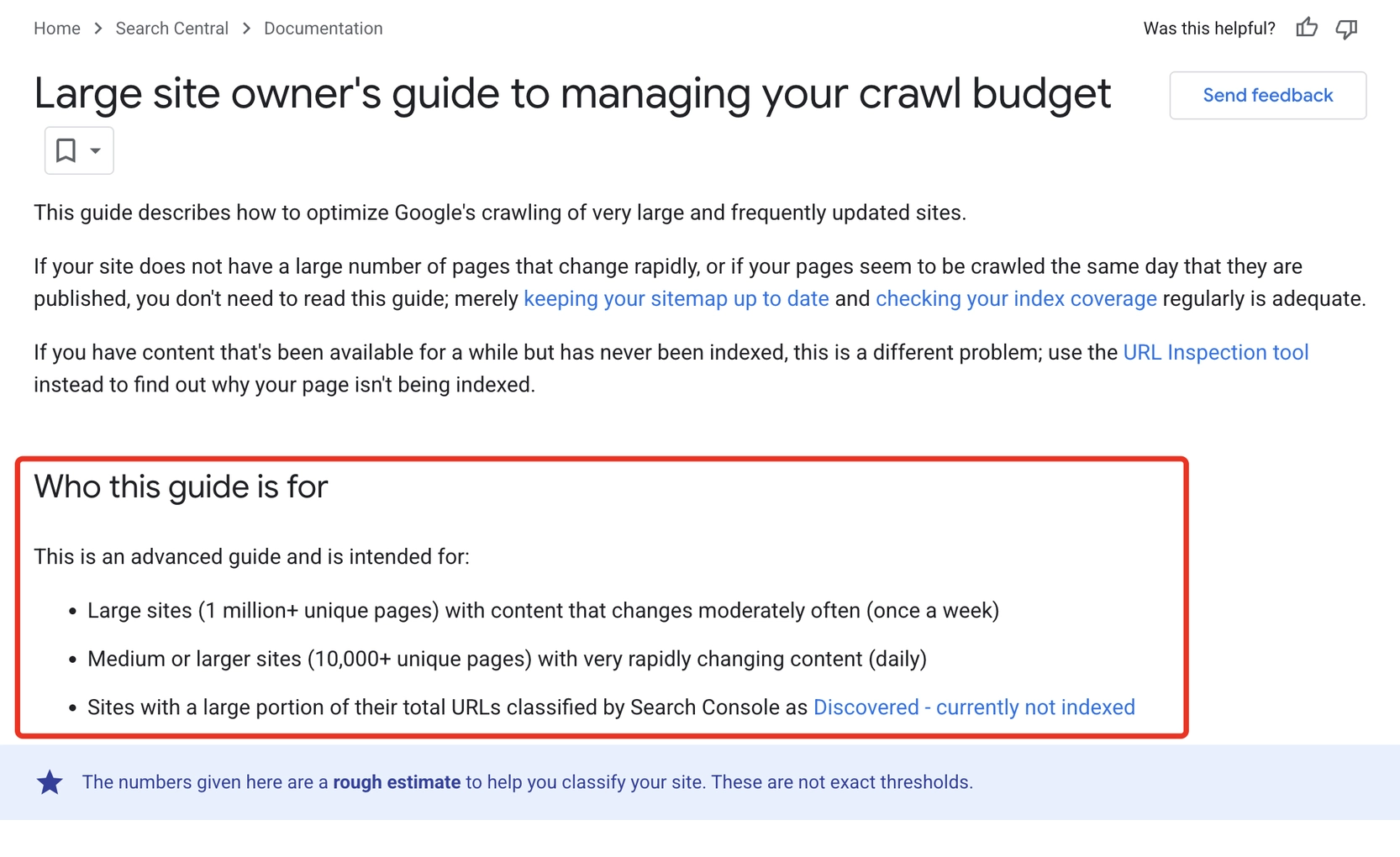

But for huge websites – especially those with millions of URLs or daily-changing content – crawl budget becomes critical (as Google confirms in its crawl budget documentation).

Google, in its documentation, clearly defines what types of websites should be concerned about crawl budget. (Screenshot from Search Central, April 2025)

In this case, you need to ensure that Google prioritizes and crawls important pages frequently without wasting resources on pages that should never be crawled or indexed.

You can check your crawl budget health using Google Search Console’s Indexing report. Pay attention to:

- Crawled – Currently Not Indexed: This usually indicates indexing problems, not crawl budget.

- Discovered – Currently Not Indexed: This typically signals crawl budget issues.

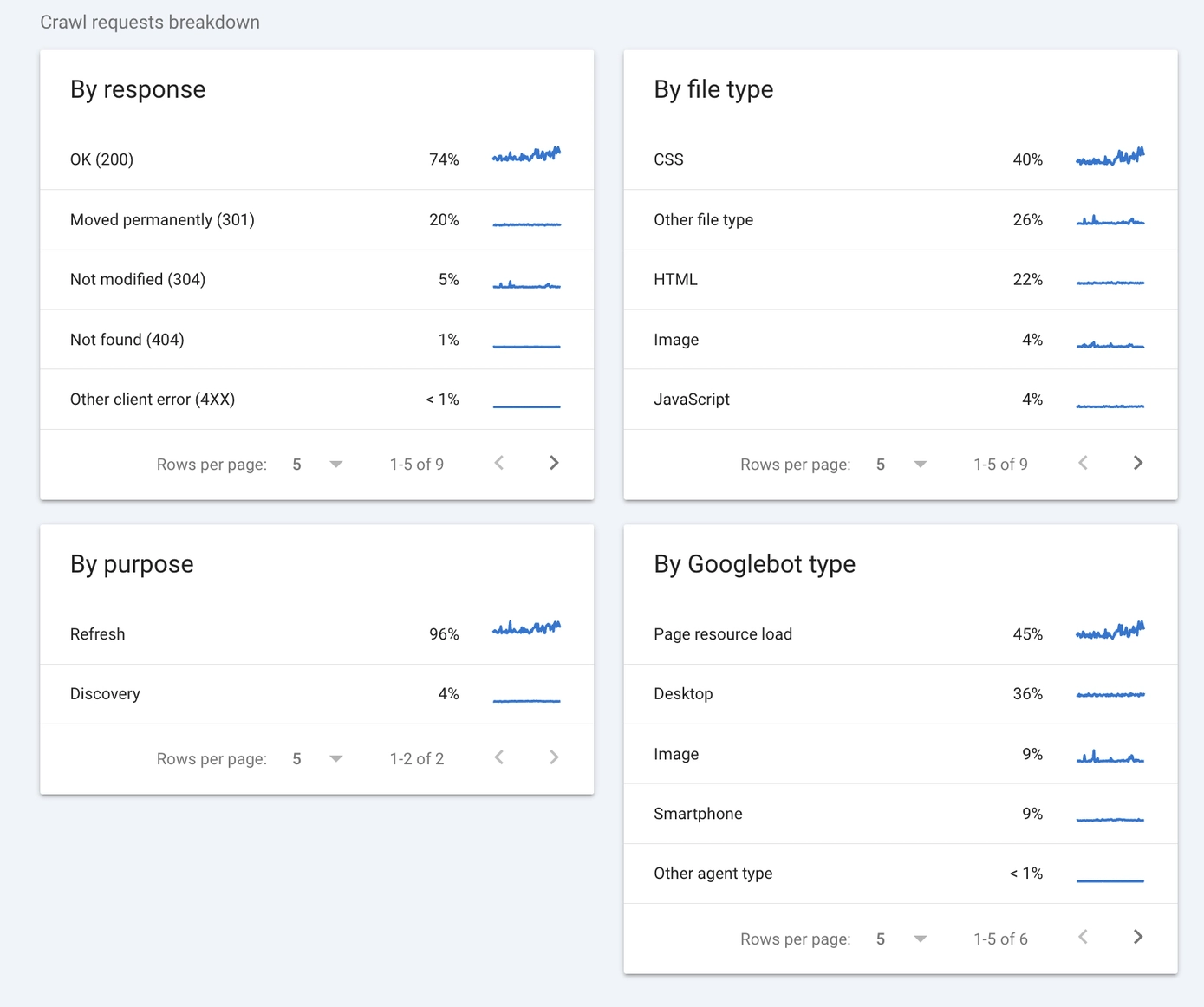

You should also regularly review Google Search Console’s Crawl Stats report to see how many pages Google crawls per day. Comparing crawled pages with total pages on your site helps you spot inefficiencies.
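As a rough illustration of that comparison, the arithmetic can be sketched as follows. The figures and the `crawl_coverage` helper are invented for the example, and the calculation assumes every crawl request hits a different page, which overstates real coverage because Googlebot revisits some URLs far more often than others.

```python
def crawl_coverage(total_pages: int, crawled_per_day: float) -> dict:
    """Estimate how much of a site is crawled per day (best case)."""
    if total_pages <= 0 or crawled_per_day <= 0:
        raise ValueError("both values must be positive")
    return {
        # Share of the site crawled in one day.
        "daily_share": crawled_per_day / total_pages,
        # Days needed to touch every page once at the current rate.
        "days_for_full_pass": total_pages / crawled_per_day,
    }


if __name__ == "__main__":
    # Hypothetical site: 2,000,000 URLs, 40,000 crawl requests per day.
    print(crawl_coverage(2_000_000, 40_000))
```

In this made-up case a full pass would take at least 50 days, which for a site with daily-changing content would be a signal to look deeper.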

While these quick checks in GSC naturally won’t replace log file analysis, they’ll give quick insights into potential crawl budget issues and may suggest that a detailed log file analysis is necessary.

→ Read more: 9 Tips To Optimize Crawl Budget For SEO

This brings us to the next point.

Lesson #4: Log File Analysis Lets You See The Whole Picture

- Lesson: Log file analysis is a must for many websites. It reveals details you can’t see otherwise and helps diagnose problems with crawlability and indexability that affect your site’s ability to rank.

Log files track every visit from search engine bots, like Googlebot or Bingbot. They show which pages are crawled, how often, and what the bots do. This data lets you spot issues and decide how to fix them.

For example, on an ecommerce site, you might find Googlebot crawling product pages, adding items to the cart, and removing them, wasting your crawl budget on useless actions.

With this insight, you can block these cart-related URLs with parameters to save resources so that Googlebot can crawl and index valuable, indexable canonical URLs.

Here is how you can make use of log file analysis:

- Start by accessing your server access logs, which record bot activity.

- Look at what pages bots hit most, how frequently they visit, and if they’re stuck on low-value URLs.

- You don’t need to analyze logs manually. Tools like Screaming Frog Log File Analyzer make it easy to identify patterns quickly.

- If you find issues, like bots repeatedly crawling URLs with parameters, you can simply update your robots.txt file to block those unnecessary crawls.
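For readers who want to see what such an analysis involves, the steps above can be sketched in a few lines of Python. This assumes logs in the common "combined" access log format; the sample lines, the regular expression, and the `googlebot_hits` helper are illustrative, and matching on the user-agent string alone does not prove a request really came from Google, since that string can be spoofed.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Matches the request, status, referrer, and user agent of a combined-format line.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<target>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical log lines, for illustration only.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Apr/2025:10:00:00 +0000] "GET /cart?add=42 HTTP/1.1" 200 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Apr/2025:10:00:05 +0000] "GET /cart?remove=42 HTTP/1.1" 200 498 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Apr/2025:10:01:00 +0000] "GET /products/shoes HTTP/1.1" 200 9001 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Apr/2025:10:02:00 +0000] "GET /checkout?step=1 HTTP/1.1" 200 700 "-" '
    '"Mozilla/5.0 (X11; Linux x86_64) Firefox/125.0"',
]


def googlebot_hits(lines):
    """Count Googlebot requests per path, and per path with query parameters."""
    paths = Counter()
    parameterized = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        parts = urlsplit(match["target"])
        paths[parts.path] += 1
        if parts.query:
            parameterized[parts.path] += 1
    return paths, parameterized


if __name__ == "__main__":
    paths, parameterized = googlebot_hits(SAMPLE_LOG)
    print("Most crawled paths:", paths.most_common(5))
    print("Paths crawled with parameters:", parameterized.most_common(5))
```

Paths that dominate the second counter, such as the cart URLs in this sample, are candidates for a robots.txt rule, after confirming they should never be crawled.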

Getting log files isn’t always easy, especially for large enterprise sites where server access may be restricted.

If that’s the case, you can use the aforementioned Crawl Stats report in Google Search Console, which provides valuable insights into Googlebot’s crawling activity, including pages crawled, crawl frequency, and response times.

The Google Search Console Crawl Stats report provides a sample of data about Google’s crawling activity. (Screenshot from Google Search Console, April 2025)

While log files offer the most detailed view of search engine interactions, even a quick check in Crawl Stats helps you spot issues you might otherwise miss.

→ Read more: 14 Must-Know Tips For Crawling Millions Of Webpages

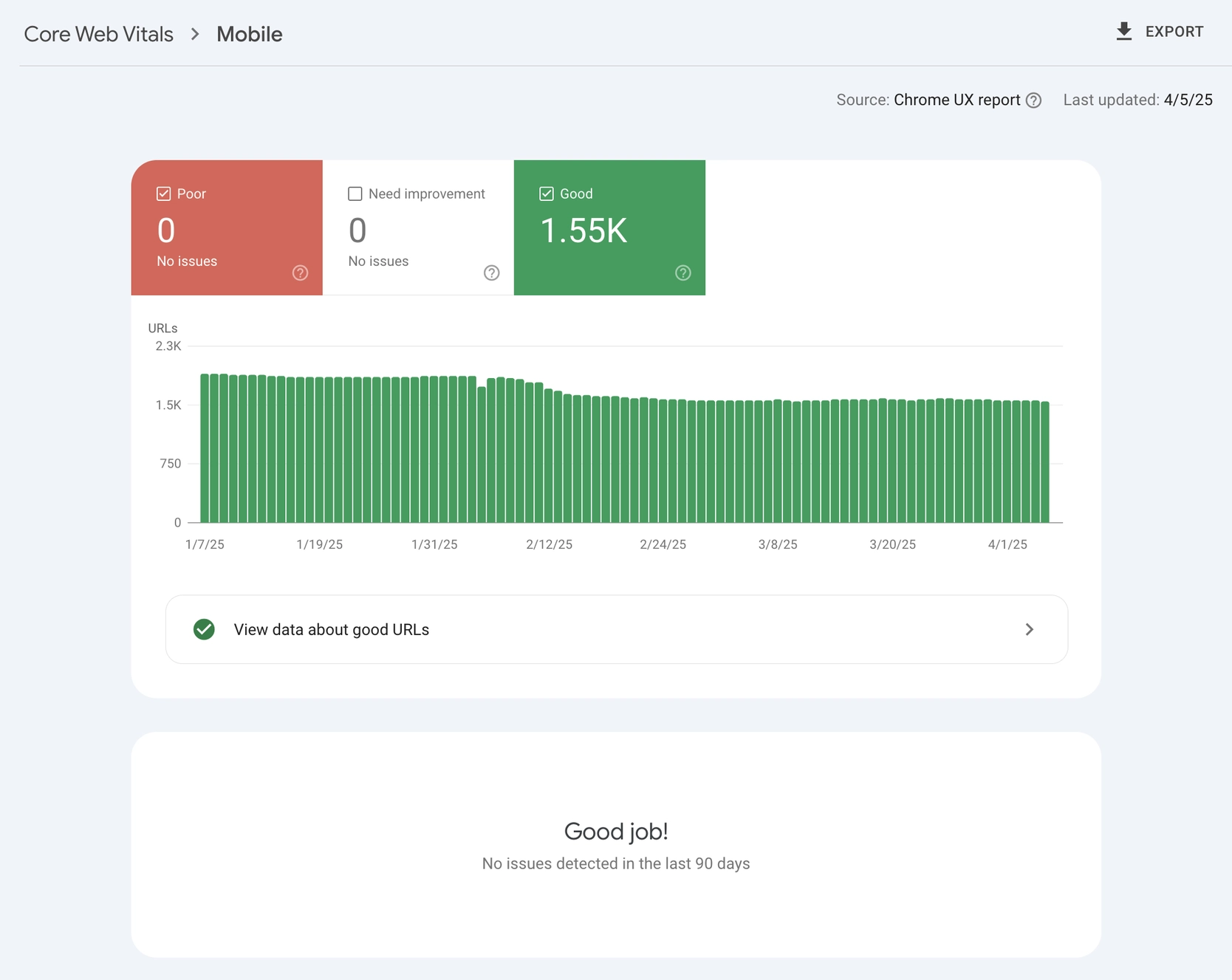

Lesson #5: Core Web Vitals Are Overrated. Stop Obsessing Over Them

- Lesson: You should focus less on Core Web Vitals. They rarely make or break SEO results.

Core Web Vitals measure loading speed, interactivity, and visual stability, but they don’t influence SEO as significantly as many assume.

After auditing over 500 websites, I’ve rarely seen Core Web Vitals alone significantly improve rankings.

Most sites only see measurable improvement if their loading times are extremely poor – taking more than 30 seconds – or if they have critical issues flagged in Google Search Console (where everything is marked in red).

The Core Web Vitals report in Google Search Console provides real-world user data. (Screenshot from Google Search Console, April 2025)

I’ve watched clients spend thousands, even tens of thousands of dollars, chasing perfect Core Web Vitals scores while overlooking fundamental SEO basics, such as content quality or keyword strategy.

Redirecting those resources toward content and foundational SEO improvements usually yields far better results.

When evaluating Core Web Vitals, you should focus exclusively on real-world data from Google Search Console (as opposed to lab data in Google PageSpeed Insights) and consider users’ geographic locations and typical internet speeds.

If your users live in urban areas with reliable high-speed internet, Core Web Vitals won’t affect them much. But if they’re rural users on slower connections or older devices, site speed and visual stability become critical.

The bottom line here is that you should always base your decision to optimize Core Web Vitals on your specific audience’s needs and real user data – not just industry trends.

→ Read more: Are Core Web Vitals A Ranking Factor?

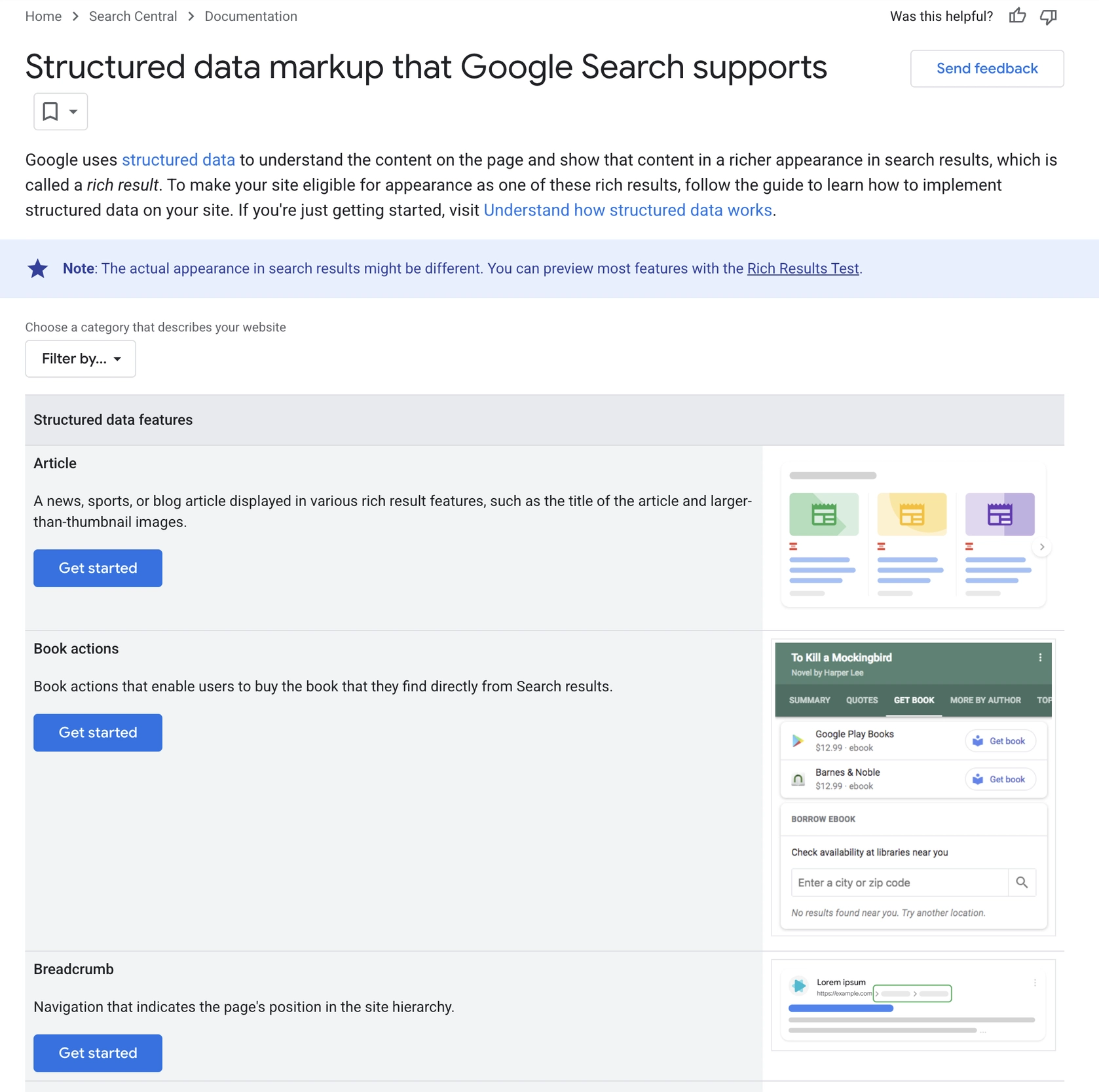

Lesson #6: Use Schema (Structured Data) To Help Google Understand & Trust You

- Lesson: You should use structured data (Schema) to tell Google who you are, what you do, and why your website deserves trust and visibility.

Schema Markup (or structured data) explicitly defines your content’s meaning, which helps Google easily understand the main topic and context of your pages.

Certain schema types, like rich results markup, allow your listings to display extra details, such as star ratings, event information, or product prices. These “rich snippets” can grab attention in search results and increase click-through rates.

You can think of schema as informative labels for Google. You can label almost anything – products, articles, reviews, events – to clearly explain relationships and context. This clarity helps search engines understand why your content is relevant for a given query.

You should always choose the correct schema type (like “Article” for blog posts or “Product” for e-commerce pages), implement it properly with JSON-LD, and carefully test it using Google’s Rich Results Test or Structured Data Testing Tool.
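As an illustration of what a JSON-LD implementation looks like, here is a minimal sketch that builds “Article” markup in Python and wraps it in the script tag that goes into the page’s HTML. The headline, author, date, and URL are placeholders, the helper names are hypothetical, and the properties shown are only a small subset of what schema.org defines; any real markup should still be validated with the testing tools mentioned above.

```python
import json


def article_data(headline: str, author_name: str, date_published: str, url: str) -> dict:
    """Build a small schema.org Article object (subset of properties)."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }


def to_script_tag(data: dict) -> str:
    """Serialize the object as a JSON-LD <script> block."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value can never close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    data = article_data(
        "11 SEO Lessons From 500 Audits",
        "Jane Doe",
        "2025-04-01",
        "https://example.com/blog/seo-lessons",
    )
    print(to_script_tag(data))
```

Generating the markup from the same data that renders the page helps keep the structured data consistent with the visible content.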

In its documentation, Google shows examples of structured data markup supported by Google Search. (Screenshot from Google Search Console, April 2025)

Schema lets you optimize SEO behind the scenes without affecting what your audience sees.

While SEO clients often hesitate about changing visible content, they usually feel comfortable adding structured data because it’s invisible to website visitors.

→ Read more: CMO Guide To Schema: How Your Organization Can Implement A Structured Data Strategy

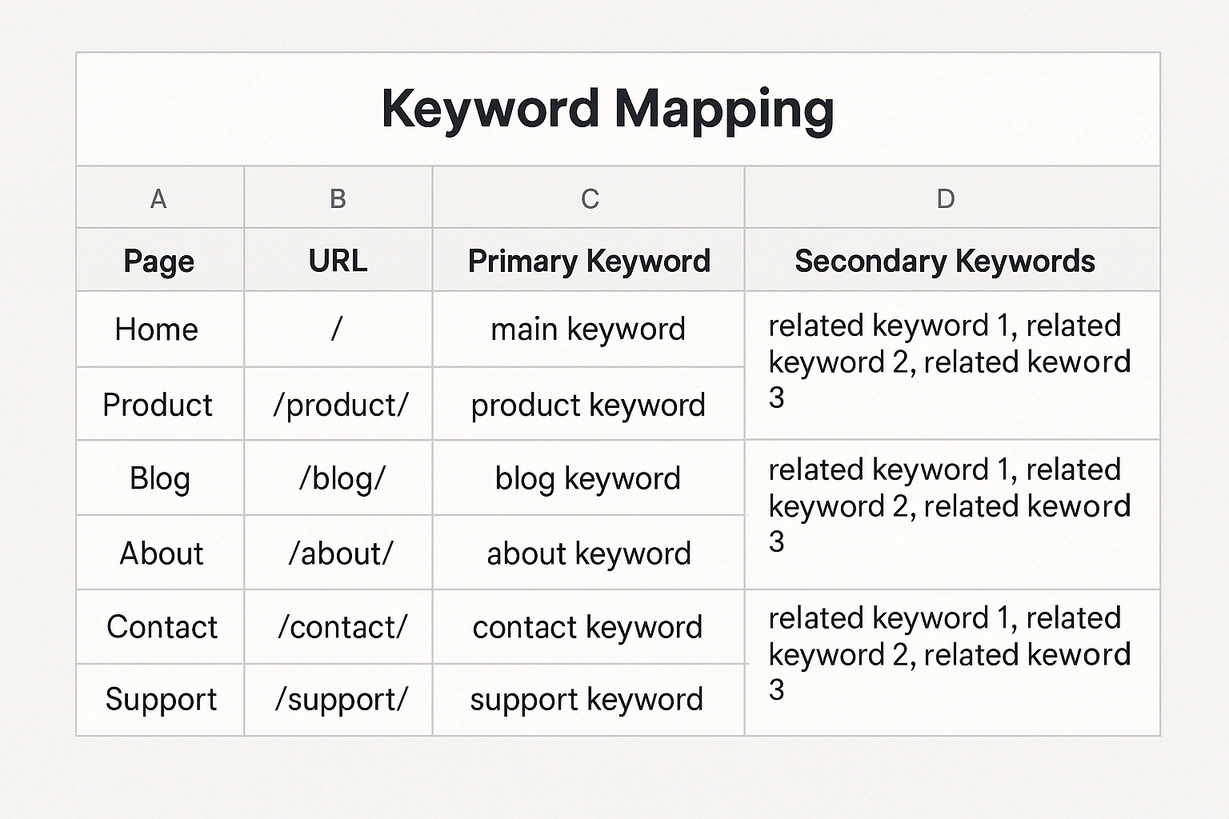

Lesson #7: Keyword Research And Mapping Are Everything

- Lesson: Technical SEO gets you into the game by controlling what search engines can crawl and index. But the next step – keyword research and mapping – tells them what your site is about and how to rank it.

Too often, websites chase the latest SEO tricks or target broad, competitive keywords without any strategic planning. They skip proper keyword research and rarely invest in keyword mapping, both essential steps to long-term SEO success:

- Keyword research identifies the exact words and phrases your audience actually uses to search.

- Keyword mapping assigns these researched terms to specific pages and gives each page a clear, focused purpose.

Every website should have a spreadsheet listing all its indexable canonical URLs.

Next to each URL, there should be the main keyword that the page should target, plus a few related synonyms or variations.
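Such a spreadsheet is easy to sanity-check once it is exported as CSV. The sketch below assumes three hypothetical column names (`url`, `main_keyword`, `variations`) and made-up rows; it flags URLs with no main keyword and main keywords assigned to more than one URL, both of which conflict with giving each page one clear purpose.

```python
import csv
import io
from collections import defaultdict

# Hypothetical keyword map export, for illustration only.
SAMPLE_MAP = """\
url,main_keyword,variations
/running-shoes,running shoes,jogging shoes; trainers
/trail-shoes,trail running shoes,off-road running shoes
/blog/best-running-shoes,Running Shoes,best shoes for running
/about,,
"""


def check_keyword_map(csv_text: str):
    """Return (urls_without_keyword, keywords_mapped_to_several_urls)."""
    urls_by_keyword = defaultdict(list)
    missing = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        url = row["url"].strip()
        keyword = row["main_keyword"].strip().lower()
        if keyword:
            urls_by_keyword[keyword].append(url)
        else:
            missing.append(url)
    duplicates = {k: v for k, v in urls_by_keyword.items() if len(v) > 1}
    return missing, duplicates


if __name__ == "__main__":
    missing, duplicates = check_keyword_map(SAMPLE_MAP)
    print("URLs without a main keyword:", missing)
    print("Keywords mapped to several URLs:", duplicates)
```

Not every flagged row is a problem (an “About” page may not need a target keyword), so the output is a list of things to review, not a list of errors.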

Having a keyword mapping document is a vital element of any SEO strategy. (Image from author, April 2025)

Without this structure, you’ll be guessing and hoping your pages rank for terms that may not even match your content.

A clear keyword map ensures every page has a defined role, which makes your entire SEO strategy more effective.

This isn’t busywork; it’s the foundation of a solid SEO strategy.

→ Read more: How To Use ChatGPT For Keyword Research

Lesson #8: On-Page SEO Accounts For 80% Of Success

- Lesson: From my experience auditing hundreds of websites, on-page SEO drives about 80% of SEO results. Yet, only about 1 in 20 or 30 sites I review have done it well. Most get it wrong from the start.

Many websites rush straight into link building, generating hundreds or even thousands of low-quality backlinks with exact-match anchor texts, before laying any SEO groundwork.

They skip essential keyword research, overlook keyword mapping, and fail to optimize their key pages first.

I’ve seen this over and over: chasing advanced or shiny tactics while ignoring the basics that actually work.

When your technical SEO foundation is strong, focusing on on-page SEO can often deliver significant results.

There are thousands of articles about basic on-page SEO: optimizing titles, headers, and content around targeted keywords.

Yet, almost nobody implements all of these basics correctly. Instead of chasing trendy or complex tactics, you should focus first on the essentials:

- Do proper keyword research to identify terms your audience actually searches.

- Map these keywords clearly to specific pages.

- Optimize each page’s title tags, meta descriptions, headers, images, internal links, and content accordingly.
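The last step can be partially checked by script. As a minimal sketch, assuming you have a page’s HTML and its mapped main keyword, the code below reports whether the keyword appears in the title, the meta description, and the H1. The sample page and the helper names are hypothetical, and an exact substring match is only a crude proxy for good optimization, not a rule any search engine publishes.

```python
from html.parser import HTMLParser


class OnPageFields(HTMLParser):
    """Collects the text of <title>, the meta description, and <h1>."""

    def __init__(self):
        super().__init__()
        self.fields = {"title": "", "meta_description": "", "h1": ""}
        self._current = None  # "title" or "h1" while inside that element

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._current = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.fields["meta_description"] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.fields[self._current] += data


def keyword_coverage(html: str, keyword: str) -> dict:
    """Map each on-page field to True if it contains the keyword."""
    parser = OnPageFields()
    parser.feed(html)
    parser.close()
    needle = keyword.lower()
    return {name: needle in value.lower() for name, value in parser.fields.items()}


# Hypothetical page, for illustration only.
PAGE = ('<html><head><title>Trail Running Shoes | Example Shop</title>'
        '<meta name="description" content="Browse our full range of footwear.">'
        '</head><body><h1>Trail Running Shoes</h1></body></html>')

if __name__ == "__main__":
    print(keyword_coverage(PAGE, "trail running shoes"))
```

Run against every row of a keyword map, a check like this quickly shows which pages have never been optimized for the term they are supposed to target.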

These straightforward steps are often enough to achieve SEO success, yet many overlook them while searching for complicated shortcuts.

→ Read more: Google E-E-A-T: What Is It & How To Demonstrate It For SEO

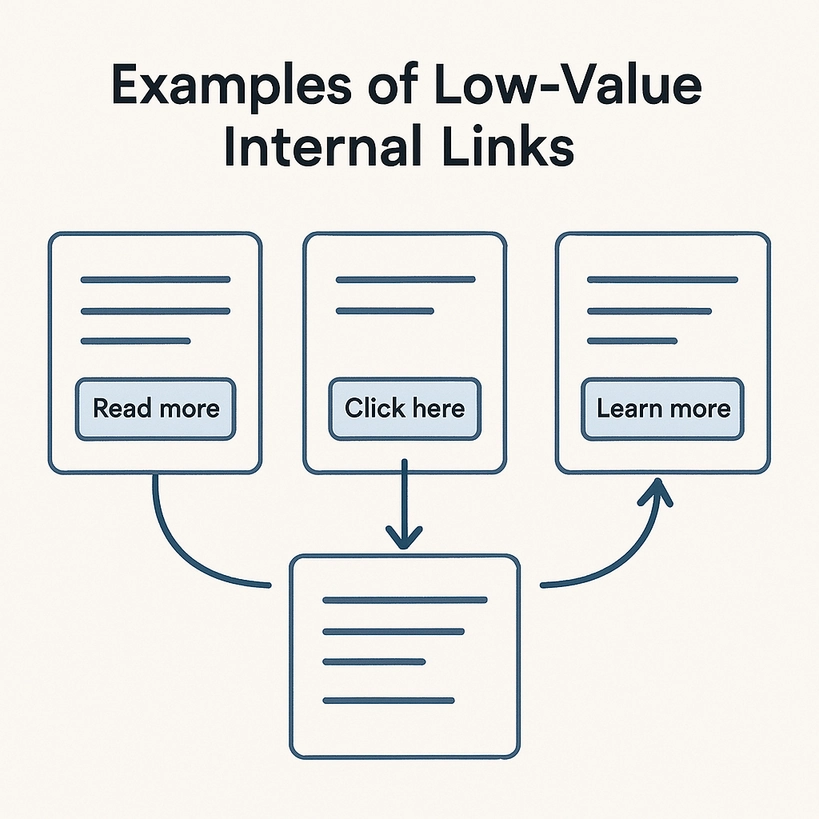

Lesson #9: Internal Linking Is An Underused But Powerful SEO Opportunity

- Lesson: Internal links hold more power than overhyped external backlinks and can significantly clarify your site’s structure for Google.

Internal links are much more powerful than most website owners realize.

Everyone talks about backlinks from external sites, but internal linking – when done correctly – can actually make a huge impact.

Unless your website is brand new, improving your internal linking can give your SEO a serious lift by helping Google clearly understand the topic and context of your site and its specific pages.

However, many websites don’t use internal links effectively. They rely heavily on generic anchor texts like “Read more” or “Learn more,” which tell search engines absolutely nothing about the linked page’s content.
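Generic anchors are easy to find programmatically. The following sketch uses Python’s standard `html.parser` to list internal links whose anchor text is on a small list of generic phrases. “Read more” and “Learn more” come from the text above; the other phrases, the sample HTML, and the rule that a link is internal when its `href` starts with a single `/` are assumptions made for the example.

```python
from html.parser import HTMLParser

# "read more" and "learn more" are named in the article; the rest are assumed.
GENERIC_ANCHORS = {"read more", "learn more", "click here", "here", "more"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href="..."> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # normalize whitespace
            self.links.append((self._href, text))
            self._href = None


def generic_internal_links(html: str):
    """Return internal links whose anchor text says nothing about the target."""
    parser = AnchorCollector()
    parser.feed(html)
    parser.close()
    return [
        (href, text)
        for href, text in parser.links
        if href.startswith("/") and not href.startswith("//")
        and text.lower() in GENERIC_ANCHORS
    ]


# Hypothetical snippet, for illustration only.
SAMPLE_HTML = (
    '<p><a href="/guides/crawl-budget">Read more</a> '
    '<a href="/guides/log-files">log file analysis guide</a> '
    '<a href="https://example.org/">Learn more</a></p>'
)

if __name__ == "__main__":
    for href, text in generic_internal_links(SAMPLE_HTML):
        print(f"{href!r} uses generic anchor {text!r}")
```

Each flagged link is a candidate for a descriptive anchor that names the topic of the linked page.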

Image from author, April 2025

Website owners often approach me convinced they need a deep technical audit.

Yet, when I take a closer look, their real issue frequently turns out to be poor internal linking or unclear website structure, both making it harder for Google to understand the site’s content and value.

Internal linking can also boost underperforming pages.

For example, if you have a page with strong external backlinks, linking internally from that high-authority page to weaker ones can pass authority and help those pages rank better.

Investing a little extra time in improving your internal links is always worth it. They’re one of the easiest yet most powerful SEO tools you have.

→ Read more: Internal Link Structure Best Practices to Boost Your SEO

Lesson #10: Backlinks Are Just One SEO Lever, Not The Only One

- Lesson: You should never blindly chase backlinks to fix your SEO. Build them strategically only after mastering the basics.

SEO audits often show websites placing too much emphasis on backlinks while neglecting many other critical SEO opportunities.

Blindly building backlinks without first covering SEO fundamentals – like removing technical SEO blockages, doing thorough keyword research, and mapping clear keywords to every page – is a common and costly mistake.

Even after getting these basics right, link building should never be random or reactive.

Too often, I see sites start building backlinks simply because their SEO isn’t progressing, hoping more links will magically help. This rarely works.

Instead, you should always approach link building strategically, by first carefully analyzing your direct SERP competitors to determine if backlinks are genuinely your missing element:

- Look closely at the pages outranking you.

- Identify whether their advantage really comes from backlinks or from better on-page optimization, content quality, or internal linking.

The decision on whether or not to build backlinks should be based on whether direct competitors have more and better backlinks. (Image from author, April 2025)

Only after ensuring your on-page SEO and internal links are strong, and confirming that backlinks are indeed the differentiating factor, should you invest in targeted link building.

Typically, you don’t need hundreds of low-quality backlinks. Often, just a few strategic editorial links or well-crafted SEO press releases can close the gap and improve your rankings.

→ Read more: How To Get Quality Backlinks: 11 Ways That Really Work

Lesson #11: SEO Tools Alone Can’t Replace Manual SEO Checks

- Lesson: You should never trust SEO tools blindly. Always cross-check their findings manually using your own judgment and common sense.

SEO tools make our work faster, easier, and more efficient, but they still can’t fully replicate human analysis or insight.

Tools lack the ability to understand context and strategy in the way that SEO professionals do. They usually can’t “connect the dots” or assess the real significance of certain findings.

This is exactly why every recommendation provided by a tool needs manual verification. You should always evaluate the severity and real-world impact of the issue yourself.

Often, website owners come to me alarmed by “fatal” errors flagged by their SEO tools.

Yet, when I manually check these issues, most turn out to be minor or irrelevant.

Meanwhile, fundamental aspects of SEO, such as strategic keyword targeting or on-page optimization, are completely missing since no tool can fully capture these nuances.

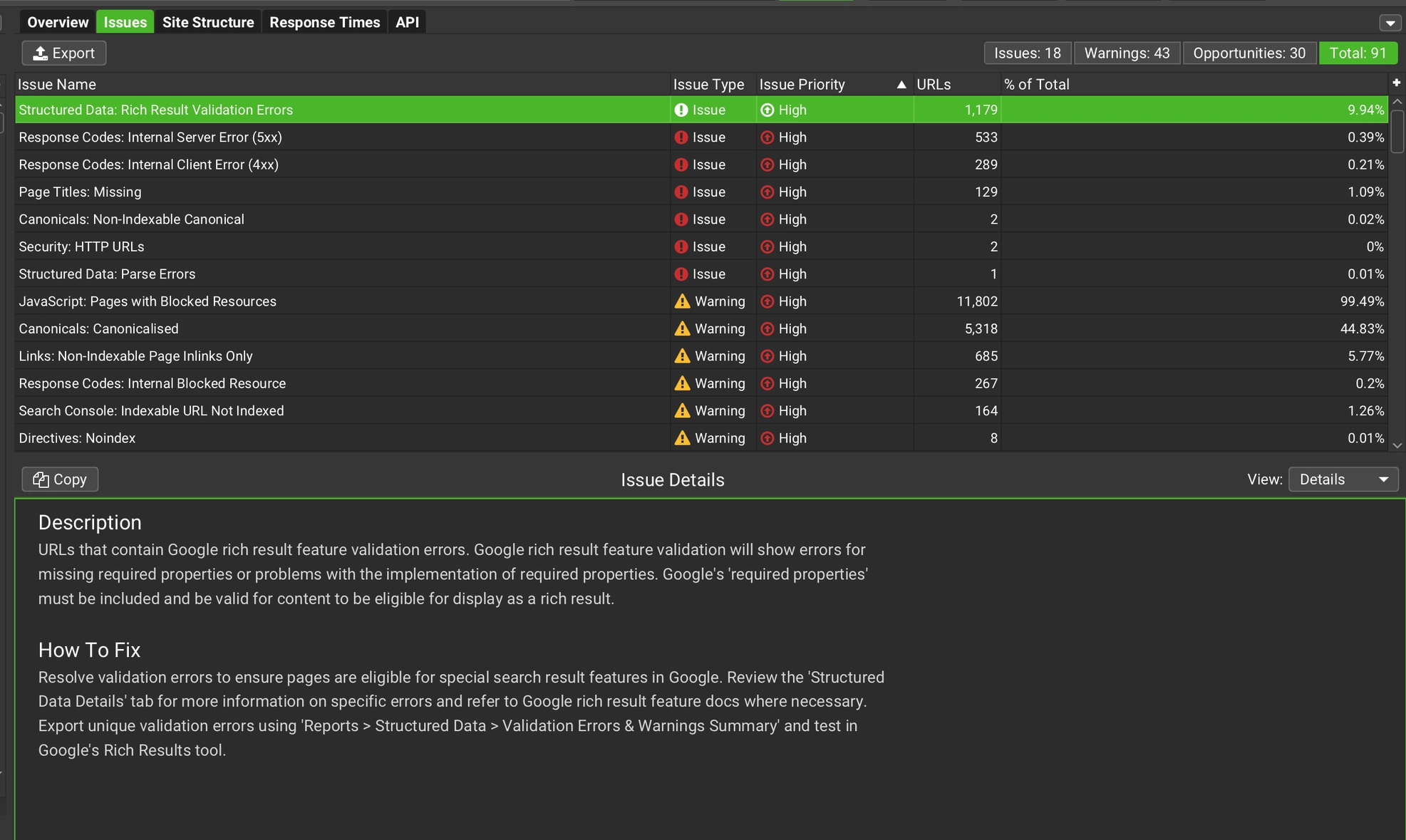

Screaming Frog SEO Spider says there are rich result validation errors, but when I check that manually, there are no errors. (Screenshot from Screaming Frog, April 2025)

SEO tools are still incredibly useful because they handle large-scale checks that humans can’t easily perform, like analyzing millions of URLs at once.

However, you should always interpret their findings carefully and manually verify the importance and actual impact before taking any action.

Final Thoughts

After auditing hundreds of websites, the biggest pattern I notice isn’t complex technical SEO issues, though they do matter.

Instead, the most frequent and significant problem is simply the lack of a clear, prioritized SEO strategy.

Too often, SEO is done without a solid foundation or clear direction, which makes all other efforts less effective.

Another common issue is undiagnosed technical problems lingering from old site migrations or updates. These hidden problems can quietly hurt rankings for years if left unresolved.

The lessons above cover the majority of challenges I encounter daily, but remember: Each website is unique. There’s no one-size-fits-all checklist.

Every audit must be personalized and consider the site’s specific context, audience, goals, and limitations.

SEO tools and AI are increasingly helpful, but they’re still just tools. Ultimately, your own human judgment, experience, and common sense remain the most critical factors in effective SEO.


Featured Image: inspiring.crew/Shutterstock