Many are aware of the popular Chain of Thought (CoT) method of prompting generative AI in order to obtain better and more sophisticated responses. Researchers from Google DeepMind and Princeton University developed an improved prompting strategy called Tree of Thoughts (ToT) that takes prompting to a higher level of results, unlocking more sophisticated reasoning methods and better outputs.

The researchers explain:

“We show how deliberate search in trees of thoughts (ToT) produces better results, and more importantly, interesting and promising new ways to use language models to solve problems requiring search or planning.”

Researchers Compare Against Three Kinds Of Prompting

The research paper compares ToT against three other prompting strategies.

1. Input-output (IO) Prompting

This is basically giving the language model a problem to solve and getting the answer.

An example based on text summarization is:

Input Prompt: Summarize the following article.

Output Prompt: Summary based on the article that was input

2. Chain Of Thought Prompting

This form of prompting guides a language model to generate coherent and connected responses by encouraging it to follow a logical sequence of thoughts. Chain-of-Thought (CoT) Prompting is a way of guiding a language model through the intermediate reasoning steps to solve problems.

Chain Of Thought Prompting Example:

Question: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

Reasoning: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. 5 + 6 = 11. The answer: 11

Question: The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?
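A prompt in this format can be assembled with a few lines of code. The following is a minimal sketch, not something taken from the research paper: the function name `build_cot_prompt` and the dictionary keys are hypothetical, and the resulting string would still need to be sent to whichever language model is being used.

```python
def build_cot_prompt(examples, question):
    """Assemble a few-shot chain-of-thought prompt (hypothetical helper).

    Each worked example shows the reasoning before the answer, and the
    prompt ends with an open "Reasoning:" line for the model to continue.
    """
    parts = []
    for ex in examples:
        parts.append(
            f"Question: {ex['question']}\n"
            f"Reasoning: {ex['reasoning']} The answer: {ex['answer']}"
        )
    # The new question is left unanswered so the model writes the reasoning.
    parts.append(f"Question: {question}\nReasoning:")
    return "\n\n".join(parts)


worked_examples = [
    {
        "question": "Roger has 5 tennis balls. He buys 2 more cans of tennis "
                    "balls. Each can has 3 tennis balls. How many tennis "
                    "balls does he have now?",
        "reasoning": "Roger started with 5 balls. 2 cans of 3 tennis balls "
                     "each is 6 tennis balls. 5 + 6 = 11.",
        "answer": "11",
    }
]

print(build_cot_prompt(
    worked_examples,
    "The cafeteria had 23 apples. If they used 20 to make lunch and bought "
    "6 more, how many apples do they have?",
))
```

Ending the prompt on an open "Reasoning:" line nudges the model to write out its intermediate steps before committing to an answer.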

3. Self-consistency with CoT

In simple terms, this is a strategy of prompting the language model multiple times and then choosing the most commonly arrived at answer.

The research paper on Self-consistency with CoT from March 2023 explains it:

“It first samples a diverse set of reasoning paths instead of only taking the greedy one, and then selects the most consistent answer by marginalizing out the sampled reasoning paths. Self-consistency leverages the intuition that a complex reasoning problem typically admits multiple different ways of thinking leading to its unique correct answer.”
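The "sample several times, keep the most common answer" idea can be sketched as a simple majority vote. This is an illustrative sketch only: `self_consistent_answer` and `sample_fn` are hypothetical names, and the canned answers below stand in for real model outputs, which would come from sampling a language model with some randomness.

```python
from collections import Counter


def self_consistent_answer(sample_fn, prompt, n_samples=5):
    """Sample several final answers and return the most common one.

    sample_fn is a stand-in (an assumption) for a call that runs one
    chain-of-thought sample and returns only the final answer as a string.
    """
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    answer, count = Counter(answers).most_common(1)[0]
    # Also report the share of samples that agreed with the winner.
    return answer, count / n_samples


# Canned answers simulate five sampled reasoning paths.
canned = iter(["11", "11", "12", "11", "10"])
answer, agreement = self_consistent_answer(
    lambda prompt: next(canned),
    "How many tennis balls does Roger have now?",
    n_samples=5,
)
print(answer, agreement)  # prints: 11 0.6
```

The agreement ratio is an extra convenience in this sketch; a low value can signal that the sampled reasoning paths disagree widely.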

Dual Process Models in Human Cognition

The researchers take inspiration from a theory of how humans make decisions, called dual process models in human cognition or dual process theory.

Dual process theory proposes that humans engage in two kinds of decision-making processes, one that is intuitive and fast and another that is more deliberative and slower.

- Fast, Automatic, Unconscious

This mode involves fast, automatic, and unconscious thinking that’s often said to be based on intuition.

- Slow, Deliberate, Conscious

This mode of decision-making is a slow, deliberate, and conscious thinking process that involves careful consideration, analysis, and step-by-step reasoning before settling on a final decision.

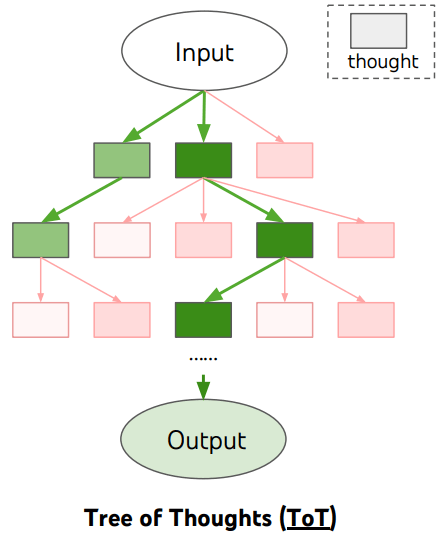

The Tree of Thoughts (ToT) prompting framework uses a tree structure for each step of the reasoning process, which allows the language model to evaluate each reasoning step and decide whether or not that step is viable and will lead to an answer. If the language model decides that a reasoning path will not lead to an answer, the prompting strategy requires it to abandon that path (or branch) and keep moving forward with another branch until it reaches the final result.
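The branch, evaluate, and abandon loop described above can be sketched as a small breadth-first search. This is a toy sketch under stated assumptions, not the researchers' implementation: in the real framework a language model proposes and scores the thoughts, whereas here the hypothetical callbacks `propose`, `evaluate`, and `is_solution` are plain Python functions, and the task (pick steps from 1, 4, and 6 that sum to 10) is invented for illustration.

```python
def tree_of_thoughts_search(root, propose, evaluate, is_solution,
                            beam_width=3, max_depth=4):
    """Breadth-first search over partial solutions ("thoughts").

    propose(state)     -> list of next states (the branches)
    evaluate(state)    -> score; 0 means the branch is judged non-viable
    is_solution(state) -> True when the state solves the task
    All three are stand-ins for what a language model would do.
    """
    frontier = [root]
    for _ in range(max_depth):
        candidates = []
        for state in frontier:
            for nxt in propose(state):
                if is_solution(nxt):
                    return nxt
                score = evaluate(nxt)
                if score > 0:  # abandon branches judged non-viable
                    candidates.append((score, nxt))
        if not candidates:
            return None
        # Keep only the most promising branches for the next step.
        candidates.sort(key=lambda pair: pair[0], reverse=True)
        frontier = [state for _, state in candidates[:beam_width]]
    return None


TARGET = 10
STEPS = (1, 4, 6)


def propose(state):
    return [state + (step,) for step in STEPS]


def evaluate(state):
    remaining = TARGET - sum(state)
    # Overshooting the target can never be repaired, so score it 0.
    return 0 if remaining < 0 else 1 / (1 + remaining)


def is_solution(state):
    return sum(state) == TARGET


result = tree_of_thoughts_search((), propose, evaluate, is_solution)
print(result)  # prints: (6, 4)
```

Swapping the three callbacks for model calls (one prompt to propose next thoughts, another to rate them) is the part that turns this search skeleton into a prompting strategy.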

Tree Of Thoughts (ToT) Versus Chain of Thought (CoT)

The difference between ToT and CoT is that ToT has a tree and branch framework for the reasoning process, whereas CoT takes a more linear path.

In simple terms, CoT tells the language model to follow a series of steps in order to accomplish a task, which resembles the System 1 cognitive model that is fast and automatic.

ToT resembles the System 2 cognitive model, which is more deliberative. It tells the language model to follow a series of steps but also has an evaluator step in and review each step. If a step is good, the model keeps going; if not, it stops and follows another path.

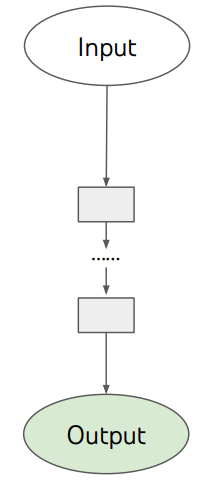

Illustrations Of Prompting Strategies

The research paper published schematic illustrations of each prompting strategy, with rectangular boxes that represent a “thought” within each step toward completing a task or solving a problem.

The following is a screenshot of what the reasoning process for ToT looks like:

Illustration of Chain of Thought Prompting

This is the schematic illustration for CoT, showing how the thought process is more of a straight path (linear):

The research paper explains:

“Research on human problem-solving suggests that people search through a combinatorial problem space – a tree where the nodes represent partial solutions, and the branches correspond to operators that modify them. Which branch to take is determined by heuristics that help to navigate the problem-space and guide the problem-solver towards a solution.

This perspective highlights two key shortcomings of existing approaches that use LMs to solve general problems:

1) Locally, they do not explore different continuations within a thought process – the branches of the tree.

2) Globally, they do not incorporate any type of planning, lookahead, or backtracking to help evaluate these different options – the kind of heuristic-guided search that seems characteristic of human problem-solving.

To address these shortcomings, we introduce Tree of Thoughts (ToT), a paradigm that allows LMs to explore multiple reasoning paths over thoughts…”

Tested With A Mathematical Game

The researchers tested the method using the Game of 24. Game of 24 is a mathematical card game in which players combine four numbers from a set of cards (each used only once) using basic arithmetic (addition, subtraction, multiplication, and division) to reach a result of 24.
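To make the rules concrete, here is a small brute-force solver for the game. It is only a sketch for illustration and is not how the researchers had the language model play: the function names `solve_24` and `_search` are hypothetical, and the input numbers are just an example. Exact fractions are used so that division does not introduce rounding errors.

```python
from fractions import Fraction
from itertools import combinations


def solve_24(numbers, target=24):
    """Return an expression that reaches the target, or None if none exists."""
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target))


def _search(items, target):
    # One value left: it either equals the target or this branch fails.
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    # Pick any two values, combine them, and recurse on the smaller list.
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        options = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            options.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            options.append((b / a, f"({eb} / {ea})"))
        for value, expr in options:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found
    return None


print(solve_24([4, 9, 10, 13]))  # one valid expression, e.g. using (10 - 4) and (13 - 9)
print(solve_24([1, 1, 1, 1]))    # prints: None
```

Each recursive call replaces two numbers with one combined value, so every partial result is a node in a tree of partial solutions, which is the kind of search space ToT is designed to explore.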

Outcomes and Conclusions

The researchers tested the ToT prompting strategy against the three other approaches and found that it produced consistently better results.

However, they also note that ToT may not be necessary for completing tasks that GPT-4 already does well at.

They conclude:

“The associative “System 1” of LMs can be beneficially augmented by a “System 2” based on searching a tree of possible paths to the solution to a problem.

The Tree of Thoughts framework provides a way to translate classical insights about problem-solving into actionable methods for contemporary LMs.

At the same time, LMs address a weakness of these classical methods, providing a way to solve complex problems that are not easily formalized, such as creative writing.

We see this intersection of LMs with classical approaches to AI as an exciting direction.”

Read the original research paper:

Tree of Thoughts: Deliberate Problem Solving with Large Language Models

Featured Picture by Shutterstock/Asier Romero