If you've always been in awe of folks using the Google Search Console API to do cool things, this article is a good read for you.

You can use BigQuery with the GSC bulk data export to get some of the same benefits without requiring the help of a developer.

With BigQuery, you can efficiently analyze large volumes of data from the GSC bulk data export.

You won't have real-time data retrieval (in our scenario, that's available with the API), but you can rely on daily data imports, which means that you're working with up-to-date information.

By leveraging BigQuery and the GSC bulk data export, you can access comprehensive search analytics data. That's the part you hear everyone raving about on LinkedIn.

According to Gus Pelogia, SEO product manager at Indeed:

“It's such a game changer and a great opportunity to learn SQL. We can finally bypass the limitations of GSC and external SEO tools. I was surprised to see how simple it was to retrieve data.”

A Structured Approach To Using BigQuery And Google Search Console (GSC) Data For Content Performance Analysis

The aim of this article is not to provide you with a long list of queries or a massive step-by-step blueprint of how to conduct the most intense audit of all time.

I aim to make you feel more comfortable getting into the groove of analyzing data without the limitations that come with the Google Search Console interface. To do this, you need to consider five steps:

- Identify use cases.

- Identify relevant metrics for each use case.

- Query the data.

- Create a Looker Studio report to help stakeholders and teams understand your analysis.

- Automate reporting.

The challenge we often face when getting started with BigQuery is that we all want to query the data right away. But that's not enough.

The real value you can bring comes from having a structured approach to your data analysis.

1. Identify Use Cases

It's often recommended that you know your data before you figure out what you want to analyze. While this is true, in this case, it will limit you.

We recommend you start by determining the specific purpose and goals for analyzing content performance.

Use Case #1: Identify The Queries And Pages That Bring The Most Clicks

“I believe that every high-quality SEO audit should also analyze the site's visibility and performance in search. Once you identify these areas, you will know what to focus on in your audit recommendations.”

Said Olga Zarr in her “How to audit a site with Google Search Console” guide.

To do that, you want the queries and the pages that bring the most clicks.

Use Case #2: Calculating UQC

If you want to spot weak areas or opportunities, calculating the Unique Query Count (UQC) per page offers valuable insights.

You already know this because you use this type of analysis in SEO tools like Semrush, SE Ranking, Dragon Metrics, or Serpstat (the latter has a great guide on How to Use Google Search Console to Create Content Plans).

However, it's incredibly useful to recreate this with your own Google Search Console data. You can automate and replicate the process on a regular basis.

There are benefits to this:

- It helps identify which pages are attracting a diverse range of search queries and which ones may be more focused on specific topics.

- Pages with a high UQC may present opportunities for further optimization or expansion to capitalize on a wider range of search queries.

- Analyzing the UQC per page can also reveal which position bands (e.g., positions 1-3, 4-10, etc.) display more variability in terms of the number of unique queries. This can help prioritize optimization efforts.

- Understanding how UQC fluctuates throughout the year can inform content planning and optimization strategies to align with seasonal trends and capitalize on peak periods of search activity.

- Comparing UQC trends across different time periods enables you to gauge the effectiveness of content optimization efforts and identify areas for further improvement.
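To track UQC over time (for example, month by month to spot seasonality), the same count-distinct-queries idea only needs a time bucket added to the grouping. The Python sketch below shows the logic on a handful of made-up rows. The tuple layout is an assumption that mirrors a few searchdata_url_impression columns. In BigQuery, grouping on url and FORMAT_DATE('%Y-%m', data_date) with COUNT(DISTINCT query) should give roughly the same result.

```python
from collections import defaultdict
from datetime import date

# Invented sample rows mimicking a few searchdata_url_impression columns:
# (data_date, url, query, search_type). query is None for anonymized queries.
rows = [
    (date(2024, 1, 5), "https://example.com/a", "blue shoes", "WEB"),
    (date(2024, 1, 9), "https://example.com/a", "running shoes", "WEB"),
    (date(2024, 1, 9), "https://example.com/a", "blue shoes", "WEB"),
    (date(2024, 2, 3), "https://example.com/a", "blue shoes", "WEB"),
    (date(2024, 2, 3), "https://example.com/a", None, "WEB"),
    (date(2024, 2, 7), "https://example.com/b", "shoe sizes", "WEB"),
    (date(2024, 2, 7), "https://example.com/b", "shoe sizes", "IMAGE"),
]


def monthly_uqc(rows):
    """Return {(url, 'YYYY-MM'): unique query count}, web searches only."""
    queries = defaultdict(set)
    for data_date, url, query, search_type in rows:
        if search_type != "WEB":
            continue  # same role as WHERE search_type = 'WEB'
        bucket = queries[(url, data_date.strftime("%Y-%m"))]
        if query is not None:  # COUNT(DISTINCT query) ignores NULLs
            bucket.add(query)
    return {key: len(found) for key, found in queries.items()}


for (url, month), uqc in monthly_uqc(rows).items():
    print(month, url, uqc)
```

A page whose monthly UQC jumps during the same months every year is a candidate for seasonal content planning.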

Use Case #3: Assessing The Content Risk

Jess Joyce, B2B & SaaS SEO expert, has a revenue-generating content optimization framework she shares with clients.

One of the critical steps is finding pages that saw a decline in clicks and impressions quarter over quarter. She relies on Search Console data to do so.

Building this query would be great, but before we jump into it, we need to assess the content risk.

If you calculate the percentage of total clicks contributed by the top 1% of pages on a website, based on the number of clicks each page receives, you can quickly pinpoint whether you are in the danger zone: that is, whether there are potential risks associated with over-reliance on a small subset of pages.

Here's why this matters:

- Over-reliance on a small subset of pages can be harmful because it reduces the diversification of traffic across the website, making it vulnerable to fluctuations or declines in traffic to those specific pages.

- Assessing the danger zone: A percentage value over 40% indicates a high reliance on the top 1% of pages for organic traffic, suggesting a potential risk.

- This query provides valuable insight into the distribution of organic traffic across a website.

2. Identify Relevant Metrics

Analyzing your content lets you discern which content is effective and which isn't, empowering you to make data-informed decisions.

Whether it's expanding or discontinuing certain content types, leveraging insights from your data enables you to tailor your content strategy to match your audience's preferences.

Metrics and analysis in content marketing provide the essential data for crafting content that resonates with your audience.

Use Case #1: Identify The Queries And Pages That Bring The Most Clicks

For this use case, you need some pretty straightforward data.

Let's list it all out here:

- URLs and/or queries.

- Clicks.

- Impressions.

- Search type: we only want web searches, not images or other types.

- A specific time period.

The next step is to determine which table you should get this information from. Remember, as we discussed previously, you have:

- searchdata_site_impression: Contains performance data for your property aggregated by property.

- searchdata_url_impression: Contains performance data for your property aggregated by URL.

In this case, you need the performance data aggregated by URL, so this means using the searchdata_url_impression table.

Use Case #2: Calculating UQC

For this use case, we need to list what we need as well:

- URL: We want to calculate UQC per page.

- Query: We want the queries associated with each URL.

- Search Type: We only want web searches, not images or other types.

- We still need to pick a table. In this case, you need the performance data aggregated by URL, so this means using the searchdata_url_impression table.

Use Case #3: Assessing The Content Risk

To calculate the “clicks contribution of top 1% pages by clicks,” you need the following metrics:

- URL: Used to calculate the clicks contribution.

- Clicks: The number of clicks each URL has received.

- Search Type: Indicates the type of search, typically 'WEB' for web searches.

- We still need to pick a table. In this case, you need the performance data aggregated by URL, so this means using the searchdata_url_impression table. (Narrator voice: notice a trend? We are practicing with one table, which enables you to get very familiar with it.)

3. Query The Data

Use Case #1: Identify The Queries And Pages That Bring The Most Clicks

Let's tie it all together to create a query, shall we?

You want to see the pages with the most clicks and impressions. This is a simple piece of code that you can get from Marco Giordano's BigQuery handbook, available via his newsletter.

We have slightly modified it to suit our needs and to ensure you keep costs low.

Copy this query to get the pages with the most clicks and impressions:

SELECT url, SUM(clicks) as total_clicks, SUM(impressions) as total_impressions FROM `pragm-ga4.searchconsole.searchdata_url_impression` WHERE search_type = 'WEB' AND url NOT LIKE '%#%' AND data_date = '2024-02-13' GROUP BY url ORDER BY total_clicks DESC;

It relies on one of the most common SQL patterns. It lets you group by a variable, in our case, URLs. Then you can select the aggregated metrics you want.

In our case, we specified impressions and clicks, so we will be summing up clicks and impressions (two columns).

Let's break down the query Marco shared:

SELECT statement

SELECT url, SUM(clicks) as total_clicks, SUM(impressions) as total_impressions: Specifies the columns to be retrieved in the result set.

- url: Represents the URL of the webpage.

- SUM(clicks) as total_clicks: Calculates the total number of clicks for each URL and assigns it the alias total_clicks.

- SUM(impressions) as total_impressions: Calculates the total number of impressions for each URL and assigns it the alias total_impressions.

FROM clause

- FROM `pragm-ga4.searchconsole.searchdata_url_impression`: Specifies the table from which to retrieve the data.

- `pragm-ga4.searchconsole.searchdata_url_impression`: Represents the name of the table containing the relevant data, written as project.dataset.table.

- Important to know: replace our table name with your table name.

WHERE clause

- WHERE search_type = 'WEB' AND url NOT LIKE '%#%' AND data_date = '2024-02-13': Filters the data based on specific conditions.

- search_type = 'WEB': Ensures that only data related to web search results is included.

- url NOT LIKE '%#%': Excludes URLs containing “#” in their address, filtering out anchor links within pages.

- data_date = '2024-02-13': Filters the data to only include records for February 13, 2024. This keeps the analysis focused on data collected on that specific date, allowing for a more granular examination of web activity for that day.

- (Narrator voice: we recommend you select a single date to keep costs low.)

Important to know: We recommend you select a date two days before today's date to ensure that you have data available.
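If you generate the query from a script, a minimal sketch of picking that date looks like this. It assumes the two-day lag above is enough for your property, and safe_export_date is a hypothetical helper name. Inside BigQuery itself, DATE_SUB(CURRENT_DATE(), INTERVAL 2 DAY) expresses the same thing.

```python
from datetime import date, timedelta


def safe_export_date(today, lag_days=2):
    """Return the ISO date lag_days before today, for use in data_date = '...'."""
    return (today - timedelta(days=lag_days)).isoformat()


print(safe_export_date(date(2024, 2, 15)))  # 2024-02-13
print(safe_export_date(date.today()))       # depends on when you run it
```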

GROUP BY clause

- GROUP BY url: Groups the results by the URL column.

- This groups the data so that the SUM function calculates total clicks and impressions for each unique URL.

ORDER BY clause

- ORDER BY total_clicks DESC: Orders the result set by the total_clicks column in descending order.

- This arranges the URLs in the result set by total number of clicks, with the URL that has the most clicks appearing first.

This query is still more advanced than what most beginners would write, because it not only retrieves data from the right table but also filters it based on specific conditions (removing anchor links and any search type other than WEB).

Then it groups the results by URL, calculates the total number of clicks and impressions for each URL, and orders them by total clicks in descending order.

This is why you should start with your use case, figure out the metrics second, and then write the query.
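If you'd like to see the filter, group, sum, and sort pattern in action without running up BigQuery costs, you can rehearse it locally. The sketch below is only a stand-in. It loads a few invented rows into an in-memory SQLite table using Python's built-in sqlite3 module. The table borrows the GSC table name and a subset of its columns. The sketch then runs the same query. SQLite's dialect differs from BigQuery's in places, so use this to check your logic, not your production syntax.

```python
import sqlite3

# In-memory stand-in for the BigQuery table (hypothetical subset of columns).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE searchdata_url_impression "
    "(data_date TEXT, url TEXT, query TEXT, search_type TEXT, clicks INTEGER, impressions INTEGER)"
)
conn.executemany(
    "INSERT INTO searchdata_url_impression VALUES (?, ?, ?, ?, ?, ?)",
    [
        ("2024-02-13", "https://example.com/a", "q1", "WEB", 10, 100),
        ("2024-02-13", "https://example.com/a", "q2", "WEB", 5, 40),
        ("2024-02-13", "https://example.com/b", "q3", "WEB", 8, 200),
        ("2024-02-13", "https://example.com/b#faq", "q3", "WEB", 3, 30),  # anchor link: excluded
        ("2024-02-13", "https://example.com/c", "q4", "IMAGE", 50, 500),  # not web search: excluded
        ("2024-02-12", "https://example.com/c", "q4", "WEB", 20, 90),  # other date: excluded
    ],
)

# Same shape as the article's query: filter, group by url, sum, sort.
top_pages = conn.execute(
    """
    SELECT url, SUM(clicks) AS total_clicks, SUM(impressions) AS total_impressions
    FROM searchdata_url_impression
    WHERE search_type = 'WEB' AND url NOT LIKE '%#%' AND data_date = '2024-02-13'
    GROUP BY url
    ORDER BY total_clicks DESC
    """
).fetchall()
print(top_pages)  # [('https://example.com/a', 15, 140), ('https://example.com/b', 8, 200)]
```

Each excluded row is there to prove that one WHERE condition works. Delete a condition and rerun to see which rows leak back in.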

Copy this SQL to get the queries in GSC with the most clicks and impressions:

SELECT query, SUM(clicks) as total_clicks, SUM(impressions) as total_impressions FROM `pragm-ga4.searchconsole.searchdata_url_impression` WHERE search_type = 'WEB' AND data_date = '2024-02-13' GROUP BY query ORDER BY total_clicks DESC;

This is the same query, but instead of the URL, we retrieve the query and aggregate the data by that field. You can see this in the GROUP BY query portion.

The problem with this query is that you are likely to get a lot of “null” results. These are anonymized queries. You can remove them with this query:

SELECT query, SUM(clicks) as total_clicks, SUM(impressions) as total_impressions FROM `pragm-ga4.searchconsole.searchdata_url_impression` WHERE search_type = 'WEB' AND is_anonymized_query = false AND data_date = '2024-02-13' GROUP BY query ORDER BY total_clicks DESC;
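To see why that filter matters, here is a small Python sketch over invented rows. Anonymized queries all collapse into a single null bucket, and that bucket can outrank your real queries. The tuple layout and the clicks_by_query helper are illustrative assumptions, not part of the GSC schema.

```python
from collections import Counter

# Invented rows: (query, is_anonymized_query, clicks).
# Anonymized queries arrive with query = None, i.e. the "null" group in SQL.
rows = [
    ("seo audit", False, 12),
    (None, True, 30),
    ("seo audit", False, 3),
    ("gsc export", False, 7),
    (None, True, 5),
]


def clicks_by_query(rows, drop_anonymized=True):
    """Sum clicks per query, sorted by clicks descending."""
    totals = Counter()
    for query, is_anonymized, clicks in rows:
        if drop_anonymized and is_anonymized:
            continue  # same effect as is_anonymized_query = false
        totals[query] += clicks
    return totals.most_common()


print(clicks_by_query(rows, drop_anonymized=False))  # [(None, 35), ('seo audit', 15), ('gsc export', 7)]
print(clicks_by_query(rows))                         # [('seo audit', 15), ('gsc export', 7)]
```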

Now, let's go one step further. I like how Iky Tai, SEO at GlobalShares, went about it on LinkedIn. First, you need to define what the query does: it shows the high-performing URLs by clicks for a given date range.

The SQL query has to retrieve the data from the specified table, filter it by a date range rather than a single date, calculate the total number of impressions and clicks for each URL, group the results by URL, and sort them by total clicks in descending order.

Now that this is done, we can build the SQL query:

SELECT url, SUM(impressions) AS impressions, SUM(clicks) AS clicks FROM `pragm-ga4.searchconsole.searchdata_url_impression` WHERE data_date BETWEEN DATE_SUB(CURRENT_DATE(), INTERVAL 3 DAY) AND DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY) GROUP BY url ORDER BY clicks DESC;

Before you copy-paste your way to glory, take the time to understand how this is built:

SELECT statement

- SELECT url, SUM(impressions) AS impressions, SUM(clicks) AS clicks: Specifies the columns to be retrieved in the result set.

- url: Represents the URL of the webpage.

- SUM(impressions) AS impressions: Calculates the total number of impressions for each URL.

- SUM(clicks) AS clicks: Calculates the total number of clicks for each URL.

FROM clause

- FROM searchconsole.searchdata_url_impression: Specifies the table from which to retrieve the data.

- (Narrator voice: You will need to replace this with the name of your own table.)

- searchconsole.searchdata_url_impression: Represents the dataset and table containing the search data for individual URLs.

WHERE clause

- WHERE data_date BETWEEN DATE_SUB(CURRENT_DATE(), INTERVAL 3 DAY) AND DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY): Filters the data based on the date range.

- data_date: Represents the date when the search data was recorded.

- BETWEEN: Specifies the date range from three days ago (INTERVAL 3 DAY) to yesterday (INTERVAL 1 DAY), including both ends.

- DATE_SUB(CURRENT_DATE(), INTERVAL 3 DAY): Calculates the date three days before the current date.

- DATE_SUB(CURRENT_DATE(), INTERVAL 1 DAY): Calculates yesterday's date from the current date.

Important to know: As we discussed previously, you may not have data available for the previous two days. This means you could change the interval to, say, 5 and 3 days instead of 3 and 1.
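Because BETWEEN includes both endpoints, the two offsets decide exactly which days you scan, and how many. This Python sketch lists the dates each pair of offsets covers. date_window is a hypothetical helper, and the sketch assumes a fixed "today" so the output is reproducible.

```python
from datetime import date, timedelta


def date_window(today, start_offset, end_offset):
    """Dates matched by data_date BETWEEN today - start_offset days AND today - end_offset days.

    BETWEEN is inclusive, so both endpoints are part of the window.
    """
    start = today - timedelta(days=start_offset)
    end = today - timedelta(days=end_offset)
    return [(start + timedelta(days=i)).isoformat() for i in range((end - start).days + 1)]


today = date(2024, 2, 15)  # fixed "today" for a reproducible example
print(date_window(today, 3, 1))  # ['2024-02-12', '2024-02-13', '2024-02-14']
print(date_window(today, 5, 3))  # ['2024-02-10', '2024-02-11', '2024-02-12']
```

Both windows span three days. The second one simply skips the two most recent days, which may not be exported yet.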

GROUP BY clause

- GROUP BY url: Groups the results by the URL column.

- This groups the data so that the SUM function calculates impressions and clicks for each unique URL.

ORDER BY clause

- ORDER BY clicks DESC: Orders the result set by the clicks column in descending order.

- This arranges the URLs in the result set by total number of clicks, with the URL that has the most clicks appearing first.

Important note: when first getting started, I encourage you to use an LLM like Gemini or ChatGPT to help break queries down into chunks you can understand.

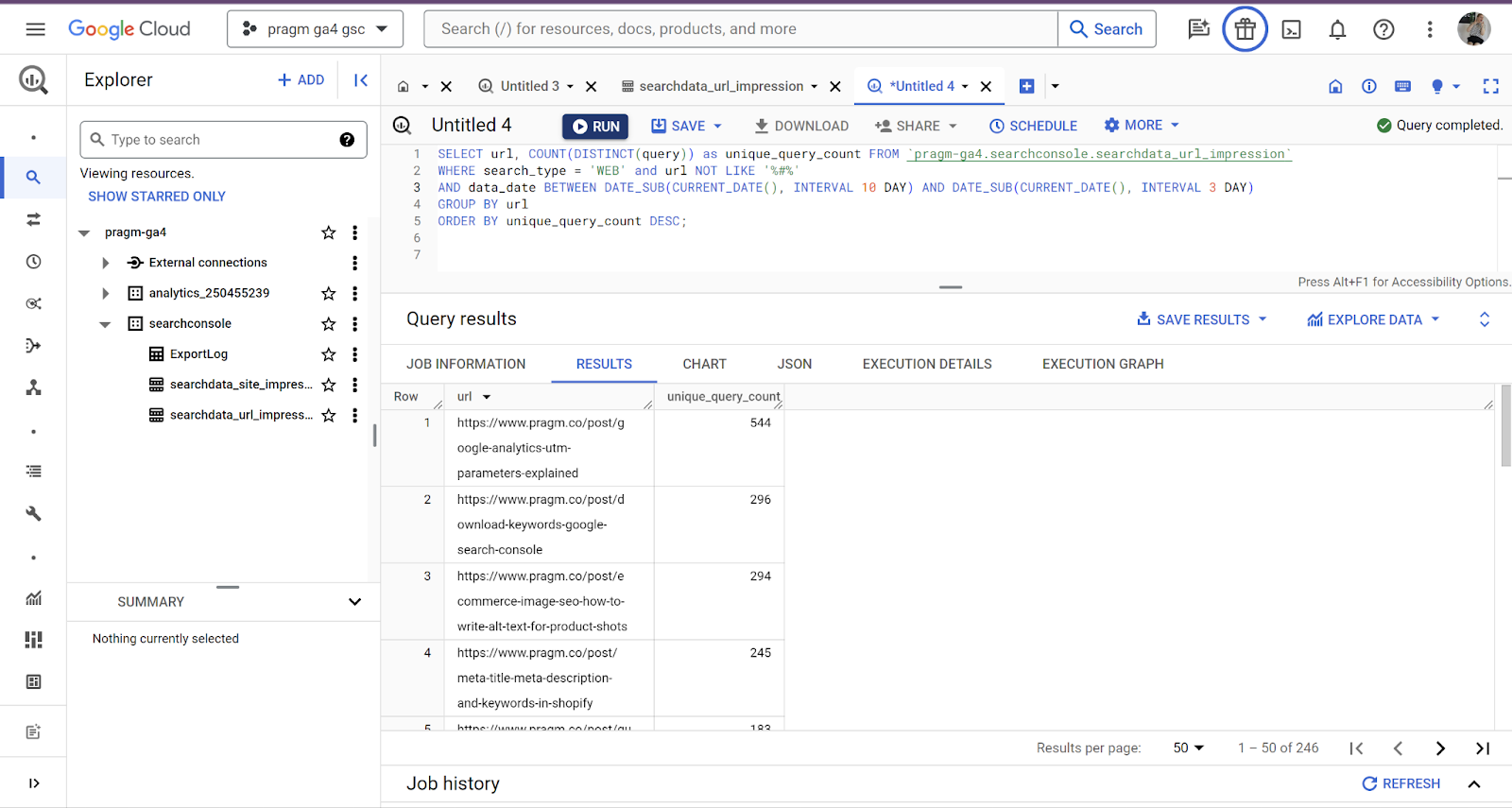

Use Case #2: Calculating UQC

Here is another useful query from Marco's handbook, which we have modified to give you seven days of data (a week's worth):

SELECT url, COUNT(DISTINCT query) AS unique_query_count FROM `pragm-ga4.searchconsole.searchdata_url_impression` WHERE search_type = 'WEB' AND url NOT LIKE '%#%' AND data_date BETWEEN DATE_SUB(CURRENT_DATE(), INTERVAL 9 DAY) AND DATE_SUB(CURRENT_DATE(), INTERVAL 3 DAY) GROUP BY url ORDER BY unique_query_count DESC;

This time, we won't break down the query.

This query calculates the Unique Query Count (UQC) per page by counting the distinct queries associated with each URL. It excludes URLs containing '#' and keeps only web searches.

It does this over a seven-day window and accounts for the fact that data may not be available for the two most recent days.

The results are then sorted by the count of unique queries in descending order, showing which pages attract a diverse range of search queries.
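For readers who want to sanity-check the logic, here is a rough Python equivalent of the UQC query over invented rows. It assumes a simplified row layout of (data_date, url, query, search_type). It uses offsets of 9 and 3 days, which cover exactly seven days because BETWEEN is inclusive. As in SQL, a URL whose only rows are anonymized (NULL) queries still appears, with a count of 0. The uqc_per_url name is hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta


def uqc_per_url(rows, today, start_offset=9, end_offset=3):
    """UQC per URL for data_date BETWEEN today - start_offset AND today - end_offset."""
    start = today - timedelta(days=start_offset)
    end = today - timedelta(days=end_offset)
    queries = defaultdict(set)
    for data_date, url, query, search_type in rows:
        if search_type != "WEB" or "#" in url:
            continue  # WHERE search_type = 'WEB' AND url NOT LIKE '%#%'
        if not start <= data_date <= end:
            continue  # BETWEEN is inclusive on both ends
        bucket = queries[url]
        if query is not None:  # COUNT(DISTINCT query) skips NULL (anonymized) queries
            bucket.add(query)
    counts = [(url, len(found)) for url, found in queries.items()]
    return sorted(counts, key=lambda pair: pair[1], reverse=True)


# Invented rows: (data_date, url, query, search_type)
rows = [
    (date(2024, 2, 10), "https://example.com/a", "gsc export", "WEB"),
    (date(2024, 2, 11), "https://example.com/a", "bigquery seo", "WEB"),
    (date(2024, 2, 11), "https://example.com/a", "gsc export", "WEB"),
    (date(2024, 2, 8), "https://example.com/b", "uqc", "WEB"),
    (date(2024, 2, 8), "https://example.com/b#faq", "uqc meaning", "WEB"),
    (date(2024, 2, 14), "https://example.com/b", "too recent", "WEB"),
    (date(2024, 2, 9), "https://example.com/c", None, "WEB"),
]
print(uqc_per_url(rows, today=date(2024, 2, 15)))
# [('https://example.com/a', 2), ('https://example.com/b', 1), ('https://example.com/c', 0)]
```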

Use Case #3: Assessing The Content Risk

This query calculates the percentage of total clicks accounted for by the top 1% of URLs in terms of clicks. It is a far more advanced query than the previous ones. It's taken straight from Marco's Playbook:

WITH PageClicksRanked AS ( SELECT url, SUM(clicks) AS total_clicks, PERCENT_RANK() OVER (ORDER BY SUM(clicks) DESC) AS percent_rank FROM `pragm-ga4.searchconsole.searchdata_url_impression` WHERE search_type="WEB" AND url NOT LIKE '%#%' GROUP BY url ) SELECT ROUND(SUM(CASE WHEN percent_rank <= 0.01 THEN total_clicks ELSE 0 END) / SUM(total_clicks) * 100, 2) AS percentage_of_clicks FROM PageClicksRanked;

This SQL query is more complex because it incorporates advanced techniques like window functions, conditional aggregation, and common table expressions.

Let's break it down:

Common Table Expression (CTE): PageClicksRanked

- This part of the query creates a temporary result set named PageClicksRanked.

- It calculates the total number of clicks for each URL and assigns each URL a percentile rank based on that total. The rank comes from the PERCENT_RANK() window function, which gives each row a relative rank within the result set, computed as (rank - 1) / (number of rows - 1).

- Columns selected:

- url: The URL from which the clicks originated.

- SUM(clicks) AS total_clicks: The total number of clicks for each URL.

- PERCENT_RANK() OVER (ORDER BY SUM(clicks) DESC) AS percent_rank: Calculates the percentile rank for each URL based on its total number of clicks, ordered in descending order.
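PERCENT_RANK() is easier to trust once you have seen the arithmetic. Each row gets (rank - 1) / (number of rows - 1), and tied values share the same rank. This Python sketch reproduces that for a descending order by clicks. percent_rank_desc is a hypothetical name, not a BigQuery function.

```python
def percent_rank_desc(values):
    """PERCENT_RANK() OVER (ORDER BY value DESC), returned in input order.

    RANK() gives tied values the same rank, so rank - 1 equals the number of
    values strictly greater than the current one. Quadratic, fine for a sketch.
    """
    n = len(values)
    if n == 1:
        return [0.0]  # PERCENT_RANK returns 0 for a single-row partition
    return [sum(1 for other in values if other > v) / (n - 1) for v in values]


print(percent_rank_desc([100, 50, 50, 10, 0]))  # [0.0, 0.25, 0.25, 0.75, 1.0]
```

The page with the most clicks always gets 0, so "percent_rank <= 0.01" in the main query means "within the first 1% of the ranking".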

Conditions

- search_type = 'WEB': Filters the data to include only web search results.

- AND url NOT LIKE '%#%': Excludes URLs containing “#” from the result set.

Grouping

- GROUP BY url: Groups the data by URL to calculate the total clicks for each URL.

Main Query

- This part of the query calculates the percentage of total clicks accounted for by the top 1% of URLs in terms of clicks.

- It sums the total clicks for URLs whose percentile rank is less than or equal to 0.01 (the top 1%) and divides that by the sum of clicks across all URLs. Then it multiplies the result by 100 to get the percentage.

Columns selected

- ROUND(SUM(CASE WHEN percent_rank <= 0.01 THEN total_clicks ELSE 0 END) / SUM(total_clicks) * 100, 2) AS percentage_of_clicks: Calculates the percentage of clicks accounted for by the top 1% of URLs. The CASE expression keeps the total clicks of URLs with a percentile rank less than or equal to 0.01 and counts every other URL as 0, so the outer SUM adds up the clicks of the top 1% only. The query then divides this sum by the total clicks across all URLs and multiplies it by 100 to get the percentage. The ROUND function rounds the result to two decimal places.

Source

- FROM PageClicksRanked: Uses the PageClicksRanked CTE as the data source for the calculations.
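Putting the pieces together, the sketch below mimics the main query in Python and then applies the 40% danger-zone threshold mentioned earlier. The input is simply a list of total clicks per URL, which is what the CTE produces. The data and the top_one_percent_share helper are invented for illustration.

```python
def top_one_percent_share(clicks_per_url, cutoff=0.01):
    """Share (%) of clicks from URLs whose PERCENT_RANK by clicks (DESC) <= cutoff."""
    n = len(clicks_per_url)
    total = sum(clicks_per_url)
    if total == 0:
        return None  # the SQL version would fail with a division by zero here
    top_clicks = 0
    for clicks in clicks_per_url:
        higher = sum(1 for other in clicks_per_url if other > clicks)
        pct_rank = higher / (n - 1) if n > 1 else 0.0
        if pct_rank <= cutoff:  # CASE WHEN percent_rank <= 0.01 THEN total_clicks ELSE 0 END
            top_clicks += clicks
    return round(top_clicks / total * 100, 2)


# Invented site: 200 URLs, two of which get most of the clicks.
clicks = [5000, 3000] + [10] * 198
share = top_one_percent_share(clicks)
print(share)  # 80.16
print("danger zone" if share > 40 else "diversified")  # danger zone
```

With 200 URLs, only the first two fall at or below the 0.01 cutoff (0 and 1/199). The third URL lands at 2/199, just above it. Ties share a percent rank, so a site where many URLs have identical click counts can end up with more than 1% of its URLs in the "top" slice.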

(Narrator voice: this is why we don't share more complex queries right away. Writing complex queries requires knowledge, practice, and an understanding of the underlying data and business requirements.)

To write such queries, you need:

- A solid understanding of SQL syntax: SELECT statements, GROUP BY, aggregate functions, subqueries, and window functions, to start.

- A deep understanding of the database schema, which is why we took the time to go through it in another article.

- Practice! Writing and optimizing SQL queries does the trick. So does working on datasets and solving analytical problems. Practice means taking an iterative approach: experiment, test, and refine your queries.

- A good cookbook: a set of reliable queries you can tweak and reuse.

- Problem-solving skills: To find the right approach, you need to be able to break complex analytical tasks down into manageable steps. That's why we started with the five-step framework.

- A performance mindset: You want to optimize query performance, especially for complex queries running on large datasets. If you don't, you could end up spending a lot of money in BigQuery.

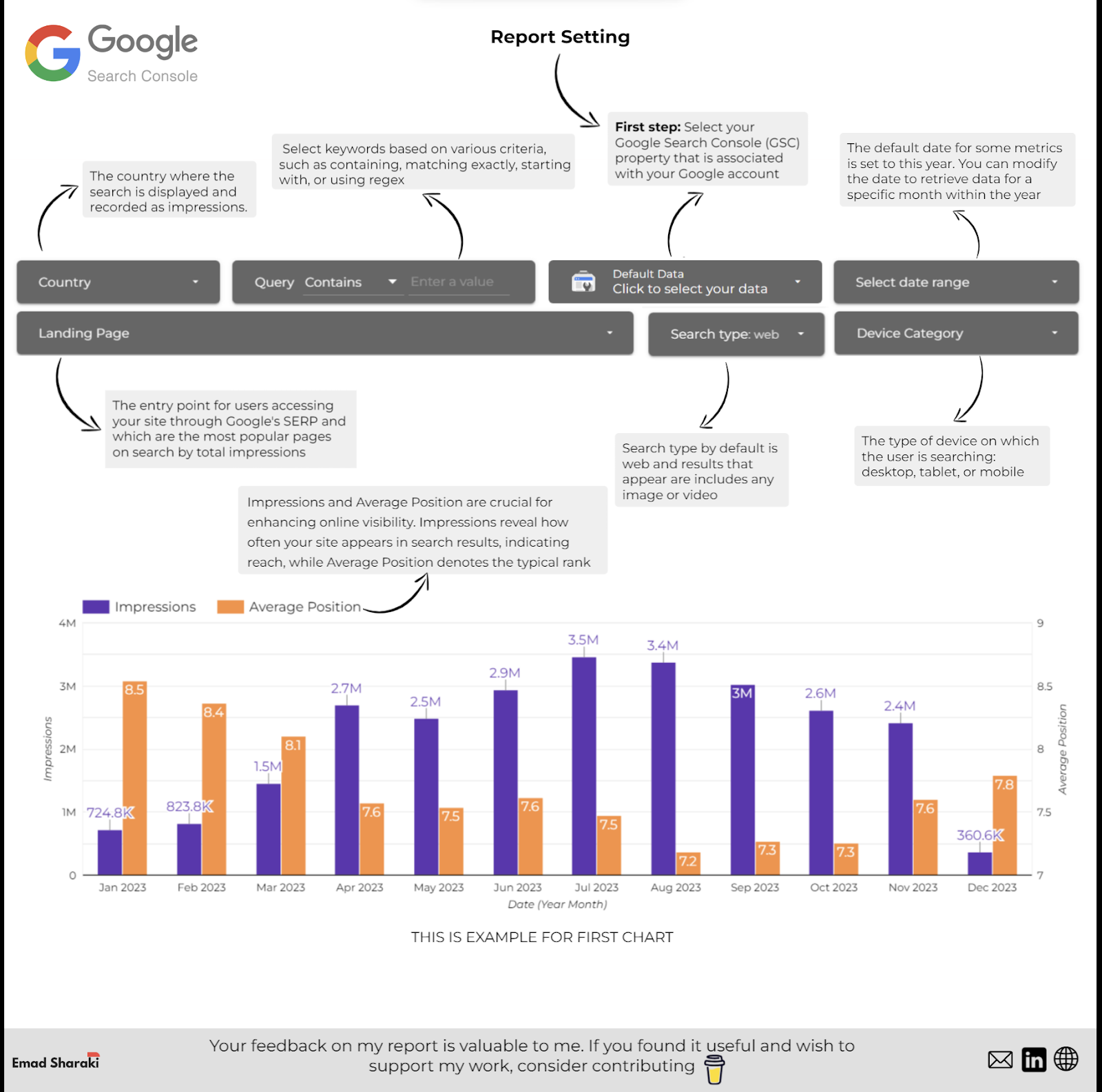

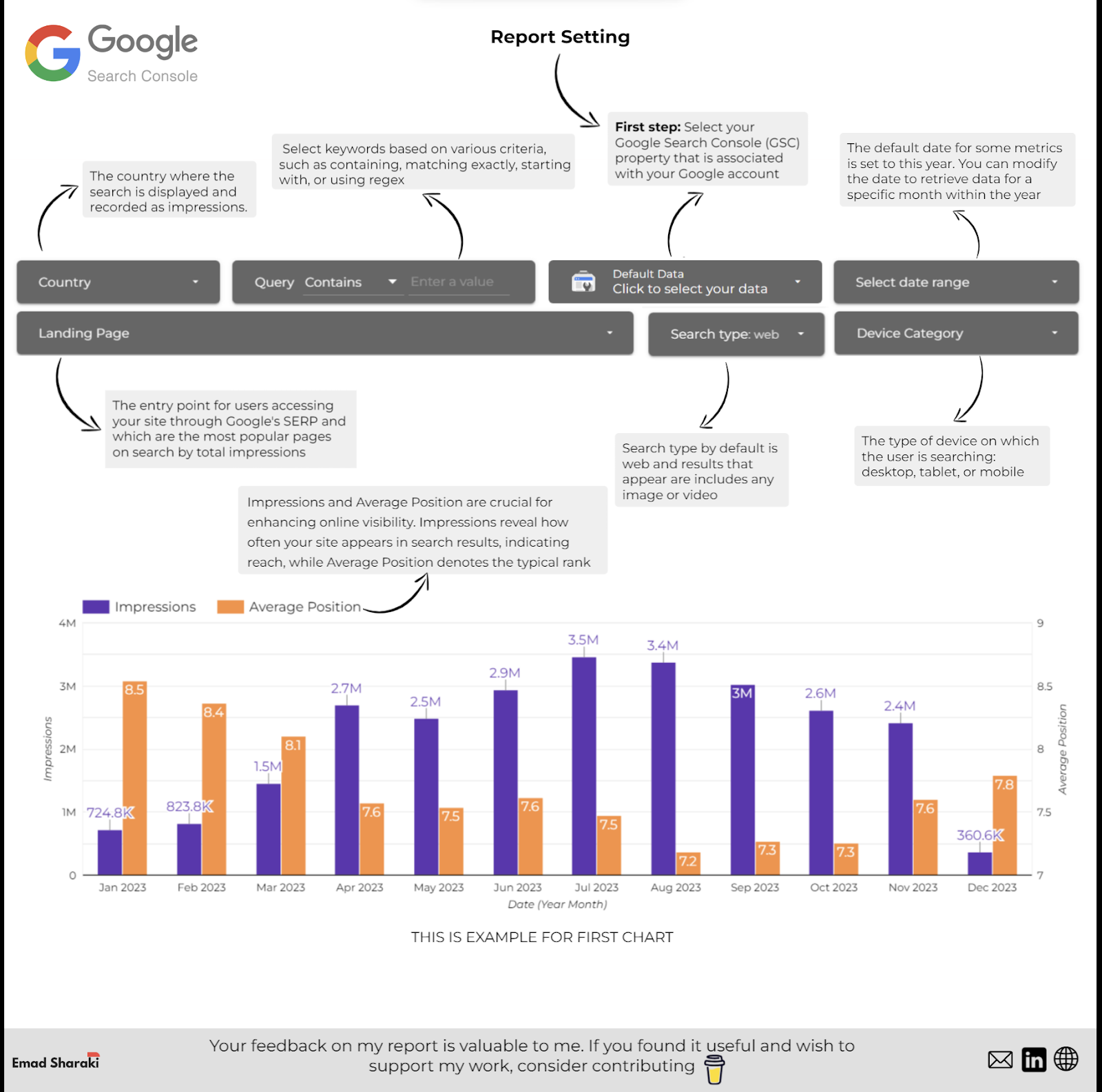

4. Create Looker Studio Dashboards

Once this is done, you can use Looker Studio to build dashboards and visualizations that showcase your content performance metrics.

You can customize these dashboards to present data in a meaningful way for different stakeholders and teams. This means you aren't the only one with access to the information.

We will dive into this part of the framework in another article.

However, if you want to get started with a Looker Studio dashboard using BigQuery data, Emad Sharaki shared his excellent dashboard. We recommend you give it a try.

Image from Emad Sharaki, February 2024


5. Automate Reporting

Once you have done all this, you can set up scheduled queries in BigQuery to automatically fetch the GSC data in the tables at regular intervals.

This means you can automate the generation and distribution of reports within your company.

For now, you can check out the official documentation for this part. We will cover it in another dedicated article.

The only tip we will share here is that you should schedule queries after the typical export window, so you're querying the most recent available data.

To monitor data freshness, track export completion times in BigQuery's export log.

You can use reporting automation to empower other teams when it comes to content creation and optimization. Gianna Brachetti-Truskawa, SEO PM and strategist, supports editorial teams by integrating reports directly into the CMS.

This means editors can filter existing articles by performance and prioritize their optimization efforts accordingly. Another reporting automation to consider is integrating with Jira to connect your performance data to a dashboard with custom rules.

This means articles can be pulled to the top of the backlog, and seasonal topics can be added to the backlog early enough to build momentum.

Going Further

Obviously, you will need more use cases and a deeper understanding of the type of content audit you want to conduct.

However, the framework we shared in this article is a great way to keep things structured. If you want to take it further, Lazarina Stoy, SEO data expert, has a few tips for you:

“When doing content performance analysis, it's important to understand that not all content is created equal. Utilize SQL Case/When statements to create subsets of the content based on page type (company page, blog post, case study, etc.), content structure patterns (concept explainer, news item, tutorial, guide, etc.), title patterns, target intent, target audiences, content clusters, and any other type of classification that is unique to your content.

That way you can monitor and troubleshoot if you detect patterns that are underperforming, as well as amplify the efforts that are paying off, whenever such are detected.”
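In BigQuery, that advice translates to a CASE expression such as CASE WHEN url LIKE '%/blog/%' THEN 'blog post' WHEN url LIKE '%/case-studies/%' THEN 'case study' ELSE 'other' END AS page_type, followed by GROUP BY page_type. The Python sketch below applies the same first-match-wins logic to a few invented rows. The URL patterns, labels, and helper names are hypothetical, and you would need to adapt them to your own site structure.

```python
from collections import defaultdict

# Hypothetical URL patterns; evaluated in order, like WHEN branches.
PAGE_TYPE_RULES = [
    ("/blog/", "blog post"),
    ("/case-studies/", "case study"),
    ("/company/", "company page"),
]


def page_type(url):
    """First matching rule wins, like CASE WHEN ... THEN ... ELSE 'other' END."""
    for fragment, label in PAGE_TYPE_RULES:
        if fragment in url:
            return label
    return "other"


def clicks_by_page_type(rows):
    """Sum clicks per page type, like GROUP BY page_type."""
    totals = defaultdict(int)
    for url, clicks in rows:
        totals[page_type(url)] += clicks
    return dict(totals)


rows = [
    ("https://example.com/blog/gsc-bigquery", 120),
    ("https://example.com/blog/uqc", 30),
    ("https://example.com/case-studies/acme", 45),
    ("https://example.com/pricing", 60),
]
print(clicks_by_page_type(rows))  # {'blog post': 150, 'case study': 45, 'other': 60}
```

Rule order matters, just as with WHEN branches. Put the most specific patterns first so a URL is not claimed by a broader rule.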

If you create queries based on these considerations, share them with us so we can add them to the cookbook of queries for content performance analysis!

Conclusion

By following this structured approach, you can effectively leverage BigQuery and GSC data to analyze and optimize your content performance while automating reporting to keep stakeholders informed.

Remember, collecting everyone else's queries will not make you an overnight BigQuery expert. Your value lies in figuring out use cases.

After that, you can determine the metrics you need and either tweak the queries others created or write your own. Once you have that in the bag, it's time to share your work by letting others use the dashboard you created to visualize your findings.

Your peace of mind will come once you automate some of these actions and develop your skills and queries even further!


Featured Image: Suvit Topaiboon/Shutterstock