The new oil isn’t data or attention. It’s words. The differentiator for building next-gen AI models is access to content when normalizing for computing power, storage, and energy.

But the web is already getting too small to satiate the hunger for new models.

Some executives and researchers say the industry’s need for high-quality text data could outstrip supply within two years, potentially slowing AI’s development.

Even fine-tuning doesn’t seem to work as well as simply building more powerful models. A Microsoft research case study shows that effective prompts can outperform a fine-tuned model by 27%.

We were wondering whether the future will consist of many small, fine-tuned models or a few big, all-encompassing ones. It seems to be the latter.

There is no AI strategy without a data strategy.

Hungry for more high-quality content to develop the next generation of large language models (LLMs), model developers are starting to pay for natural content and reviving their efforts to label synthetic data.

For content creators of any kind, this new flow of money could carve the path to a new content monetization model that incentivizes quality and makes the web better.

KYC: AI

If content is the new oil, social networks are oil rigs. Google invested $60 million a year in using Reddit content to train its models and surface Reddit answers at the top of search. Pennies, if you ask me.

YouTube CEO Neal Mohan recently sent a clear message to OpenAI and other model developers that training on YouTube is a no-go, defending the company’s massive oil reserves.

The New York Times, which is currently pursuing a lawsuit against OpenAI, published an article stating that OpenAI developed Whisper to train models on YouTube transcripts, and that Google uses content from all of its platforms, like Google Docs and Maps reviews, to train its AI models.

Generative AI data providers like Appen or Scale AI are recruiting (human) writers to create content for LLM training.

Make no mistake, writers aren’t getting rich writing for AI.

For $25 to $50 per hour, writers perform tasks like ranking AI responses, writing short stories, and fact-checking.

Applicants must have a Ph.D. or master’s degree or be currently attending college. Data providers are clearly looking for experts and “good” writers. But the early signs are promising: Writing for AI could be monetizable.

Image Credit: Kevin Indig

Model developers look for good content in every corner of the web, and some platforms are happy to sell it.

Content platforms like Photobucket sell photos for five cents to one dollar apiece. Short-form videos can get $2 to $4; longer films cost $100 to $300 per hour of footage.

With billions of photos, the company struck oil in its backyard. Which CEO can withstand such a temptation, especially as content monetization is getting harder and harder?

From Free Content:

Publishers are getting squeezed from multiple sides:

- Few are prepared for the death of third-party cookies.

- Social networks send less traffic (Meta) or deteriorate in quality (X).

- Most young people get news from TikTok.

- SGE looms on the horizon.

Ironically, labeling AI content better might help LLM development because it makes it easier to separate natural from synthetic content.

In that sense, it’s in the interest of LLM developers to label AI content so they can exclude it from training or use it the right way.

Labeling

Drilling for words to train LLMs is just one side of developing next-gen AI models. The other is labeling. Model developers need labeling to avoid model collapse, and society needs it as a shield against fake news.

A new movement of AI labeling is rising despite OpenAI dropping its AI detection tool due to low accuracy (26%). Instead of labeling content themselves, which seems futile, big tech (Google, YouTube, Meta, and TikTok) pushes users to label AI content with a carrot-and-stick approach.

Google uses a two-pronged approach to fight AI spam in search: prominently showing forums like Reddit, where content is most likely created by humans, and handing out penalties.

From AIfficiency:

Google is surfacing more content from forums in the SERPs to counterbalance AI content. Verification is the ultimate AI watermarking. Even though Reddit can’t prevent humans from using AI to create posts or comments, chances are lower because of two things Google search doesn’t have: Moderation and Karma.

Yes, Content Goblins have already taken aim at Reddit, but most of the 73 million daily active users provide useful answers.1 Content moderators punish spam with bans or even kicks. But the most powerful driver of quality on Reddit is Karma, “a user’s reputation score that reflects their community contributions.” Through simple up or downvotes, users can gain authority and trustworthiness, two integral ingredients in Google’s quality systems.

Google recently clarified that it expects merchants not to remove AI metadata, embedded via the IPTC metadata protocol, from images.

When an image has a tag like compositeSynthetic, Google might label it as “AI-generated” anywhere, not just in shopping. The punishment for removing AI metadata is unclear, but I imagine it to be like a link penalty.
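To make the tagging idea concrete, here is a minimal sketch of how a platform might check an image's IPTC digital source type. It assumes the metadata has already been read into a plain dictionary; the key name `DigitalSourceType`, the helper `is_ai_labeled`, and the choice of which vocabulary terms count as AI are illustrative assumptions, not Google's or Meta's actual logic.

```python
# Hypothetical sketch: the dict layout and key name are assumptions that stand
# in for whatever an image-metadata reader returns.

# IPTC digital source type terms treated as "AI" here (an assumed selection).
AI_SOURCE_TYPES = {
    "trainedAlgorithmicMedia",  # media created by a trained model
    "compositeSynthetic",       # composite that includes synthetic elements
}


def is_ai_labeled(metadata: dict) -> bool:
    """Return True if the digital source type marks the image as synthetic."""
    value = metadata.get("DigitalSourceType", "")
    # The value may be a bare term or a full vocabulary URI ending in the term.
    term = value.rsplit("/", 1)[-1]
    return term in AI_SOURCE_TYPES


if __name__ == "__main__":
    tagged = {"DigitalSourceType": "compositeSynthetic"}
    photo = {"DigitalSourceType": "digitalCapture"}
    print(is_ai_labeled(tagged), is_ai_labeled(photo))
```

A check like this only works while the tag is still present, which is why removing the metadata defeats the label.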

IPTC is the same format Meta uses for Instagram, Facebook, and WhatsApp. Both companies give IPTC metatags to any content coming out of their own LLMs. The more AI tool makers follow the same guidelines to mark and tag AI content, the more reliably detection systems work.

When photorealistic images are created using our Meta AI feature, we do several things to make sure people know AI is involved, including putting visible markers that you can see on the images, and both invisible watermarks and metadata embedded within image files. Using both invisible watermarking and metadata in this way improves both the robustness of these invisible markers and helps other platforms identify them.

The downsides of AI content are small when the content looks like AI. But when AI content looks real, we need labels.

While advertisers try to get away from the AI look, content platforms prefer it because it’s easy to recognize.

For commercial artists and advertisers, generative AI has the power to massively speed up the creative process and deliver personalized ads to customers on a large scale – something of a holy grail in the marketing world. But there’s a catch: Many images AI models generate feature cartoonish smoothness, telltale flaws, or both.

Consumers are already turning against “the AI look,” so much so that an uncanny and cinematic Super Bowl ad for Christian charity He Gets Us was accused of being born from AI – even though a photographer created its images.

YouTube started enforcing new guidelines for video creators that say realistic-looking AI content needs to be labeled.

Challenges posed by generative AI have been an ongoing area of focus for YouTube, but we know AI introduces new risks that bad actors may try to exploit during an election. AI can be used to generate content that has the potential to mislead viewers – particularly if they’re unaware that the video has been altered or is synthetically created. To better address this concern and inform viewers when the content they’re watching is altered or synthetic, we’ll start to introduce the following updates:

- Creator Disclosure: Creators will be required to disclose when they’ve created altered or synthetic content that’s realistic, including using AI tools. This will include election content.

- Labeling: We’ll label realistic altered or synthetic election content that doesn’t violate our policies, to clearly indicate for viewers that some of the content was altered or synthetic. For elections, this label will be displayed in both the video player and the video description, and will surface regardless of the creator, political viewpoints, or language.

The biggest imminent fear is fake AI content that could influence the 2024 U.S. presidential election.

No platform wants to be the Facebook of 2016, which saw lasting reputational damage that impacted its stock price.

Chinese and Russian state actors have already experimented with fake AI news and tried to meddle with the Taiwanese and upcoming U.S. elections.

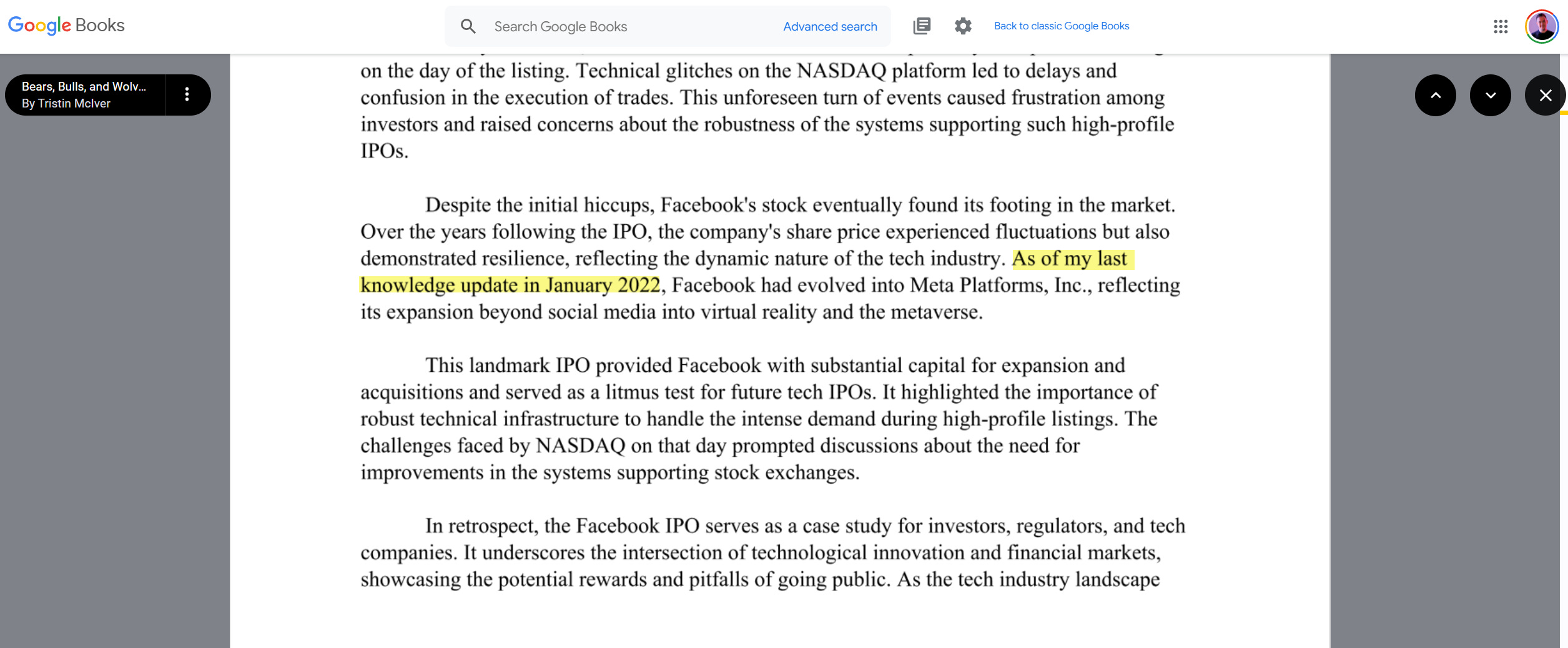

Now that OpenAI is close to releasing Sora, which creates hyperrealistic videos from prompts, it’s not a far jump to imagine how AI videos can cause problems without strict labeling. The situation is tough to get under control. Google Books already features books that were clearly written with or by ChatGPT.

Image Credit: Kevin Indig

Takeaway

Labels, whether mental or visual, influence our decisions. They annotate the world for us and have the power to create or destroy trust. Like category heuristics in shopping, labels simplify our decision-making and information filtering.

From Messy Middle:

Lastly, the idea of category heuristics, numbers customers focus on to simplify decision-making, like megapixels for cameras, offers a path to specify user behavior optimization. An ecommerce store selling cameras, for example, should optimize their product cards to prioritize category heuristics visually. Granted, you first need to gain an understanding of the heuristics in your categories, and they might vary based on the product you sell. I guess that’s what it takes to be successful in SEO these days.

Soon, labels will tell us whether content was written by AI. In a public survey of 23,000 respondents, Meta found that 82% of people want labels on AI content. Whether common standards and punishments work remains to be seen, but the urgency is there.

There is also an opportunity here: Labels could shine a spotlight on human writers and make their content more valuable, depending on how good AI content becomes.

On top of that, writing for AI could be another way to monetize content. While current hourly rates don’t make anyone rich, model training adds new value to content. Content platforms could find new revenue streams.

Web content has become extremely commercialized, but AI licensing could incentivize writers to create good content again and untie themselves from affiliate or advertising income.

Sometimes, the contrast makes value visible. Maybe AI can make the web better after all.

For Data-Guzzling AI Companies, the Internet Is Too Small

The Power Of Prompting

Inside Big Tech’s Underground Race To Buy AI Training Data

OpenAI Gives Up On Detection Tool For AI-Generated Text

IPTC Photo Metadata

Labeling AI-Generated Images on Facebook, Instagram and Threads

How The Ad Industry Is Making AI Images Look Less Like AI

How We’re Helping Creators Disclose Altered Or Synthetic Content

Addressing AI-Generated Election Misinformation

China Is Targeting U.S. Voters And Taiwan With AI-Powered Disinformation

Google Books Is Indexing AI-Generated Garbage

Our Approach To Labeling AI-Generated Content And Manipulated Media

Featured Image: Paulo Bobita/Search Engine Journal