TL;DR

- Search Console has some pretty severe limitations when it comes to storage, anonymized and incomplete data, and API limits.

- You can bypass a lot of these limitations and make GSC work much harder for you by setting up far more properties at a subfolder level.

- You can have up to 1,000 properties in your Search Console account. Don't stop at one domain-level property.

- All of this allows for far richer indexation, query, and page-level analysis. All for free. Particularly if you make use of the API's 2,000-URLs-per-property daily cap for indexation analysis.

Now, this mainly applies to enterprise sites: sites with a deep subfolder structure and a rich history of publishing a lot of content. Technically, this isn't publisher-specific. If you work for an ecommerce brand, it should be incredibly useful, too.

I (and this approach) love all big and clunky sites equally.

What Is A Search Console Property?

A Search Console property is a domain, subfolder, or subdomain variation of a website that you can prove you own.

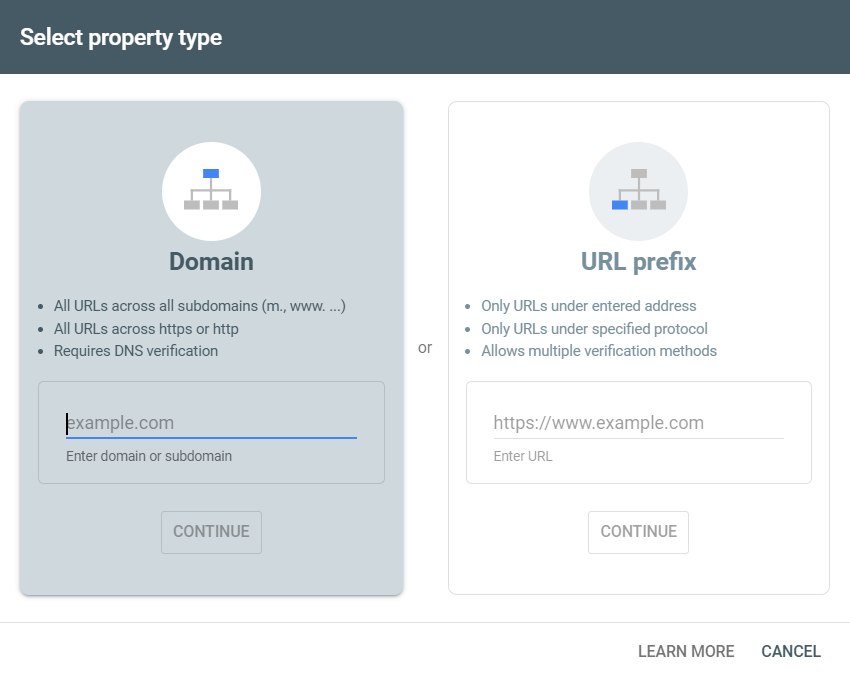

You can set up domain-level or URL-prefix-level properties (Image Credit: Harry Clarkson-Bennett)

If you just set up a domain-level property, you still get access to all the good stuff GSC offers: click and impression data, indexation analysis, and the crawl stats report (only available in domain-level properties), to name a few. But you're hampered by some pretty severe limitations:

- 1,000 rows of query and page-level data.

- A 2,000-URL daily API limit for indexation-level analysis.

- Sampled keyword data (and privacy masking).

- Missing data (in some cases, 70% or more).

- 16 months of data.

While the 16-month limit and sampled keyword data require you to export your data to BigQuery (or use one of the tools below), you can massively improve your GSC experience by making better use of properties.

There are a number of verification methods available: DNS verification, HTML tag or file upload, and Google Analytics tracking code. Once you have set up and verified a domain-level property, you're free to add any child-level property, whether subdomains or subfolders.

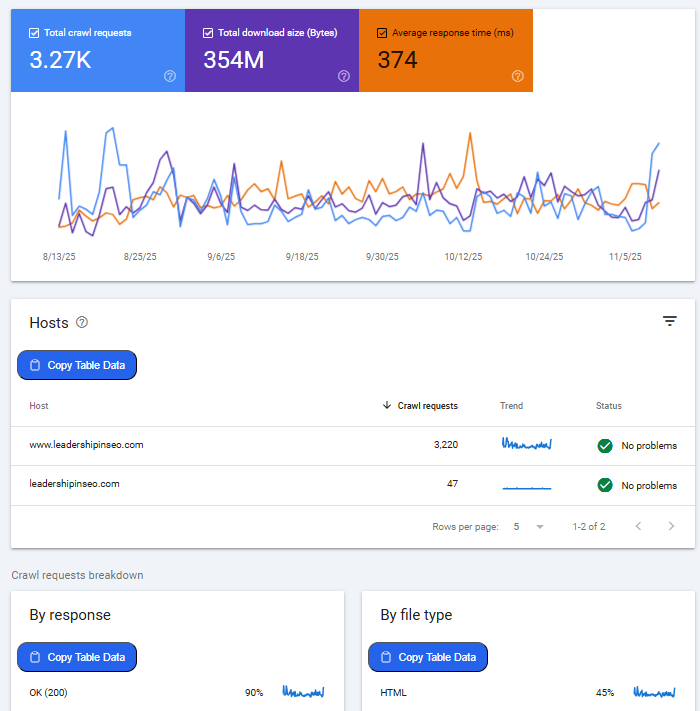

The crawl stats report can be an absolute goldmine, particularly for large sites (not this one!) (Image Credit: Harry Clarkson-Bennett)

The crawl stats report can be extremely useful for debugging issues like spikes in parameter URLs or problems from naughty subdomains. Particularly on large sites, where departments do things you and I don't find out about until it's too late.

But by breaking down changes at a host, file type, and response code level, you can stop problems at the source. You can identify issues affecting your crawl budget before you have to hit someone over the head for their approach to internal linking and parameter URLs.

Usually, anyway. Sometimes people just need a good clump. Metaphorically speaking, of course.

Subdomains are usually seen as separate entities with their own crawl budget. However, this isn't always the case. According to John Mueller, Google may group your subdomains together for crawl budget purposes.

According to Gary Illyes, crawl budget is typically set by host name. So subdomains should have their own crawl budget if their host name is separate from the main domain.
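The crawl stats report gives you these breakdowns in the UI. If you also have raw request data (an assumption here, for example Googlebot hits pulled from your server logs), a few lines of Python can show which hosts are generating parameter URLs. This is a minimal sketch; `parameter_share_by_host` is a hypothetical helper, not part of any Google tool.

```python
from collections import Counter
from urllib.parse import urlsplit


def parameter_share_by_host(urls):
    """Return {host: (total_urls, parameter_urls, share)} for a list of crawled URLs.

    A URL counts as a parameter URL if it has a non-empty query string.
    """
    totals = Counter()
    with_params = Counter()
    for url in urls:
        parts = urlsplit(url)
        host = parts.hostname or ""
        totals[host] += 1
        if parts.query:
            with_params[host] += 1
    return {
        host: (total, with_params[host], with_params[host] / total)
        for host, total in totals.items()
    }


if __name__ == "__main__":
    # Hypothetical sample; in practice, feed in URLs from your server logs.
    sample = [
        "https://www.example.com/news/story",
        "https://www.example.com/news/story?utm_source=newsletter",
        "https://www.example.com/search?q=shoes",
        "https://shop.example.com/p/123",
    ]
    for host, (total, params, share) in sorted(parameter_share_by_host(sample).items()):
        print(f"{host}: {params}/{total} parameter URLs ({share:.0%})")
```

Run it over the same log window each week and a sudden jump in one host's share is usually the first sign that someone has shipped a new faceted navigation or tracking parameter.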

How Can I Identify The Right Properties?

As an SEO, it's your job to know the website better than anybody else. In most cases, that isn't too hard because you work with digital ignoramuses. Usually, you can just find this data in GSC. But larger sites need a little more love.

Crawl your site using Screaming Frog, Sitebulb, or the artist formerly known as Deepcrawl, and build out a picture of your site structure if you don't already know it. Add the most valuable properties first (revenue first, traffic second) and work from there.
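Once you have a crawl, turning it into a shortlist of subfolder properties is straightforward. A minimal sketch, assuming you have exported a flat list of indexable URLs from your crawler (the export format and the helper name `candidate_subfolder_properties` are illustrative assumptions):

```python
from collections import Counter
from urllib.parse import urlsplit


def candidate_subfolder_properties(urls, top_n=None):
    """Group crawled URLs by top-level subfolder and return URL-prefix property candidates.

    Returns [(property_url, url_count), ...], largest subfolder first. Only URLs that
    sit inside a folder (at least two path segments) are counted, so standalone pages
    such as /about are left to the domain-level property.
    """
    counts = Counter()
    for url in urls:
        parts = urlsplit(url)
        segments = [s for s in parts.path.split("/") if s]
        if len(segments) < 2:
            continue
        counts[f"{parts.scheme}://{parts.netloc}/{segments[0]}/"] += 1
    ranked = counts.most_common()
    return ranked if top_n is None else ranked[:top_n]
```

URL count is only a proxy for importance. Since the advice above is revenue first and traffic second, re-rank this list with your own commercial data before adding the properties.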

Some Alternatives To GSC

Before going any further, it would be remiss of me not to mention some excellent alternatives to GSC. Alternatives that completely remove these limitations for you.

SEO Stack

SEO Stack is a fantastic tool that removes all query limits and has a built-in, MCP-style setup where you can literally talk to your data. For example, ask it to show you content that has always performed well in September, or to identify pages with a healthy query counting profile.

Daniel has been very vocal about query counting, and it's a fantastic way to understand the direction of travel your site or content is taking in search. More queries ranking in the top 3 or top 10 positions: good. Fewer queries there and more further down: bad.
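Query counting is easy to reproduce on exported data. A minimal sketch, assuming rows exported with `page` and `query` dimensions and one average position per page/query pair; the bucket boundaries and function names are illustrative, not SEO Stack's implementation.

```python
from collections import defaultdict

# Position buckets (upper bound inclusive), mirroring the "top 3 or top 10" framing above.
BUCKETS = (("pos_1_3", 3), ("pos_4_10", 10), ("pos_11_plus", float("inf")))


def bucket_for(position):
    for name, upper in BUCKETS:
        if position <= upper:
            return name
    raise ValueError(f"unexpected position: {position}")


def query_counts(rows):
    """rows: (page, query, avg_position) tuples, one per page/query pair.

    Returns {page: {bucket: number_of_queries}}.
    """
    counts = defaultdict(lambda: {name: 0 for name, _ in BUCKETS})
    for page, _query, position in rows:
        counts[page][bucket_for(position)] += 1
    return dict(counts)


def direction_of_travel(before, after, page):
    """Per-bucket change in query counts for one page between two periods."""
    empty = {name: 0 for name, _ in BUCKETS}
    b = before.get(page, empty)
    a = after.get(page, empty)
    return {name: a[name] - b[name] for name in empty}
```

A positive `pos_1_3` delta alongside a flat or falling `pos_11_plus` is the "good" pattern described above; the reverse is the warning sign.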

SEO Gets

SEO Gets is a more budget-friendly alternative to SEO Stack (which in itself isn't that expensive). SEO Gets also removes the standard row limitations associated with Search Console and makes content analysis much more efficient.

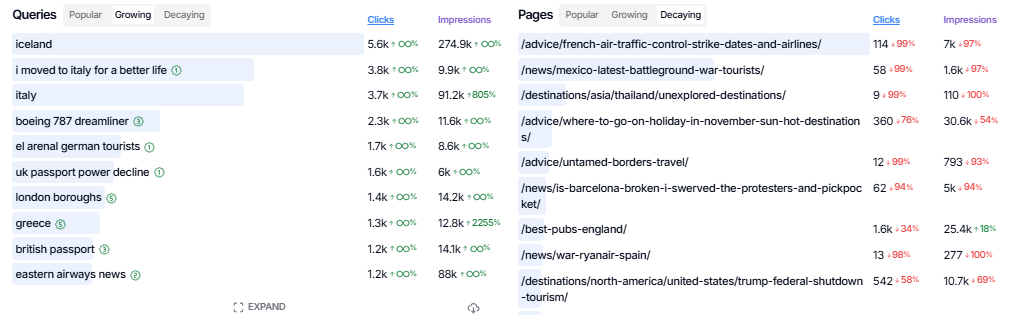

Growing and decaying pages and queries in SEO Gets are super useful (Image Credit: Harry Clarkson-Bennett)

Create keyword and page groups for query counting and for click and impression analysis at a content-cluster level. SEO Gets arguably has the best free version of any tool on the market.

Indexing Insight

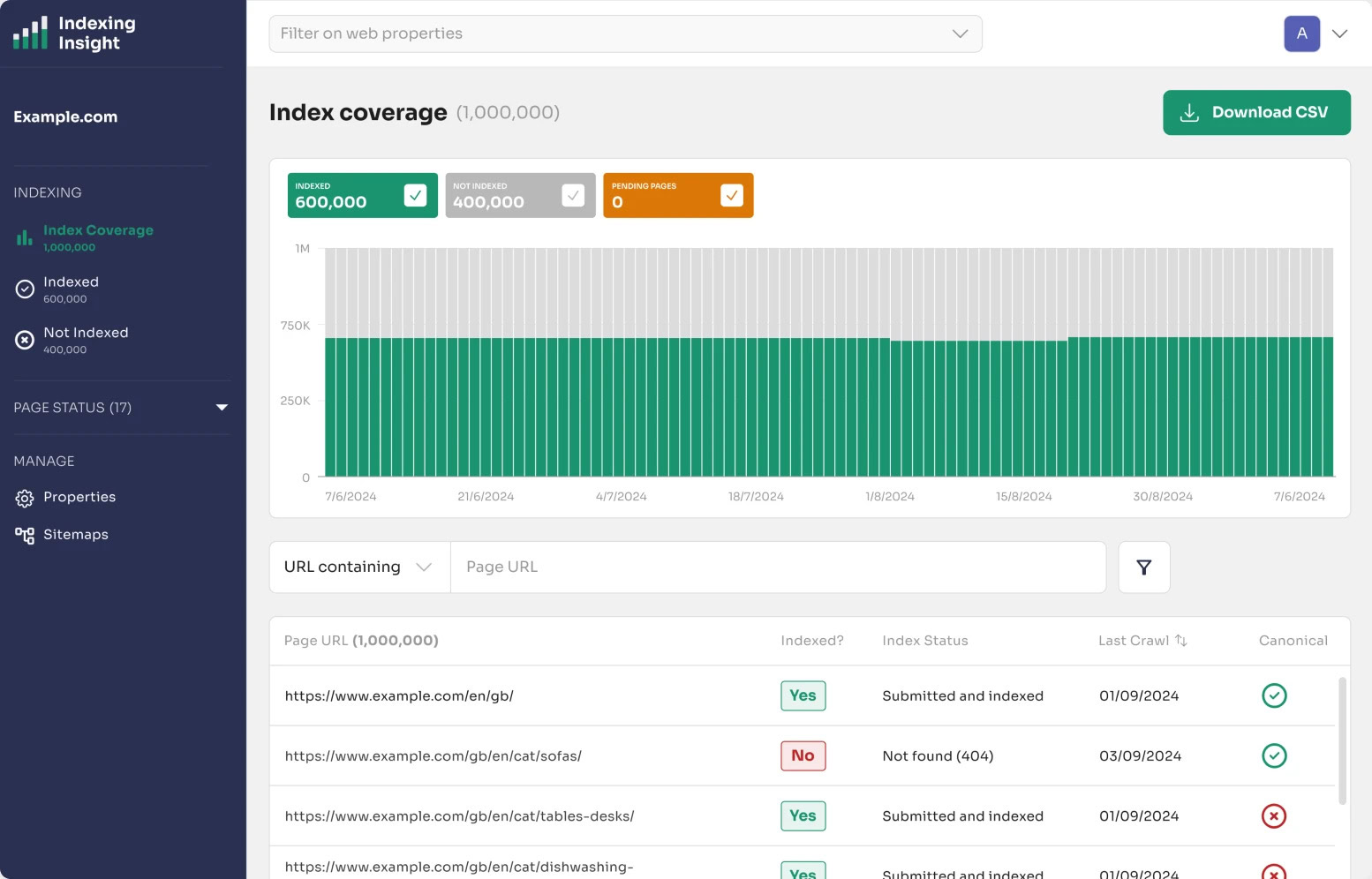

Indexing Insight, Adam Gent's ultra-detailed indexation analysis tool, is a lifesaver for large, sprawling websites. 2,000 URLs per day just doesn't cut the mustard for enterprise sites. But by cleverly taking the multi-property approach, you can leverage 2,000 URLs per property.

With some excellent visualizations and data points (did you know that if a URL hasn't been crawled for 130 days, it drops out of the index?), you need a solution like this. Particularly on legacy and enterprise sites.
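If you pull inspection results yourself, you can flag URLs approaching that threshold. A minimal sketch, assuming you have stored each URL's last crawl time as an RFC 3339 string (the URL Inspection API reports this as `lastCrawlTime`); the 130-day cut-off is the figure quoted above, not a documented Google rule, and `stale_urls` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# Threshold quoted in the article, not a documented Google rule.
STALE_AFTER = timedelta(days=130)


def parse_rfc3339(timestamp):
    # datetime.fromisoformat only accepts a trailing "Z" from Python 3.11 onwards.
    return datetime.fromisoformat(timestamp.replace("Z", "+00:00"))


def stale_urls(last_crawled, now=None, threshold=STALE_AFTER):
    """last_crawled: {url: RFC 3339 timestamp, or None if no crawl is recorded}.

    Returns [(url, days_since_crawl)] for URLs at or past the threshold, with
    never-crawled URLs (days = None) first, then the longest-neglected URLs.
    """
    now = now or datetime.now(timezone.utc)
    at_risk = []
    for url, timestamp in last_crawled.items():
        if timestamp is None:
            at_risk.append((url, None))
            continue
        age = now - parse_rfc3339(timestamp)
        if age >= threshold:
            at_risk.append((url, age.days))
    return sorted(at_risk, key=lambda item: (item[1] is not None, -(item[1] or 0)))
```

The output is a worklist: these are the URLs worth internally linking to, refreshing, or consolidating before they fall out.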

Remove the indexation limits of 2,000 URLs per day with the API and the 1,000-row URL limit (Image Credit: Harry Clarkson-Bennett)

All of these tools instantly improve your Search Console experience.

Benefits Of A Multi-Property Approach

Arguably, the most effective way of getting around some of the aforementioned issues is to scale the number of properties you own, for two main reasons: it's free, and it gets around core API limitations.

Everyone likes free stuff. I once walked past a newsagent doing an opening-day promotion where they were giving away tins of chopped tomatoes. Which was weird. Even weirder was that there was a queue. A queue I ended up joining.

Spaghetti Bolognese has never tasted so sweet.

Granular Indexation Monitoring

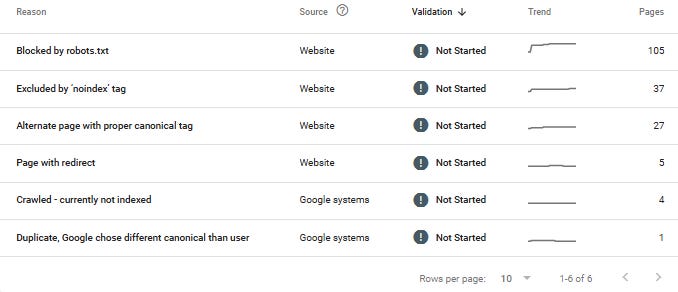

Arguably, one of Search Console's best but most limiting features is its indexation analysis. Understanding the differences between Crawled - Currently Not Indexed and Discovered - Currently Not Indexed can help you make smart decisions that improve the efficiency of your site, significantly improving your crawl budget and internal linking strategies.

Image Credit: Harry Clarkson-Bennett

Pages that sit in the Crawled - Currently Not Indexed pipeline may not require any immediate action. The page has been crawled but hasn't been deemed fit for Google's index. This could signify page quality issues, so it's worth ensuring your content is adding value and your internal linking prioritizes important pages.

Discovered - Currently Not Indexed is slightly different. It means that Google has found the URL but hasn't yet crawled it. It could be that your content output isn't quite on par with Google's perceived value of your site. Or that your internal linking structure needs to better prioritize important content. Or some kind of server or technical issue.

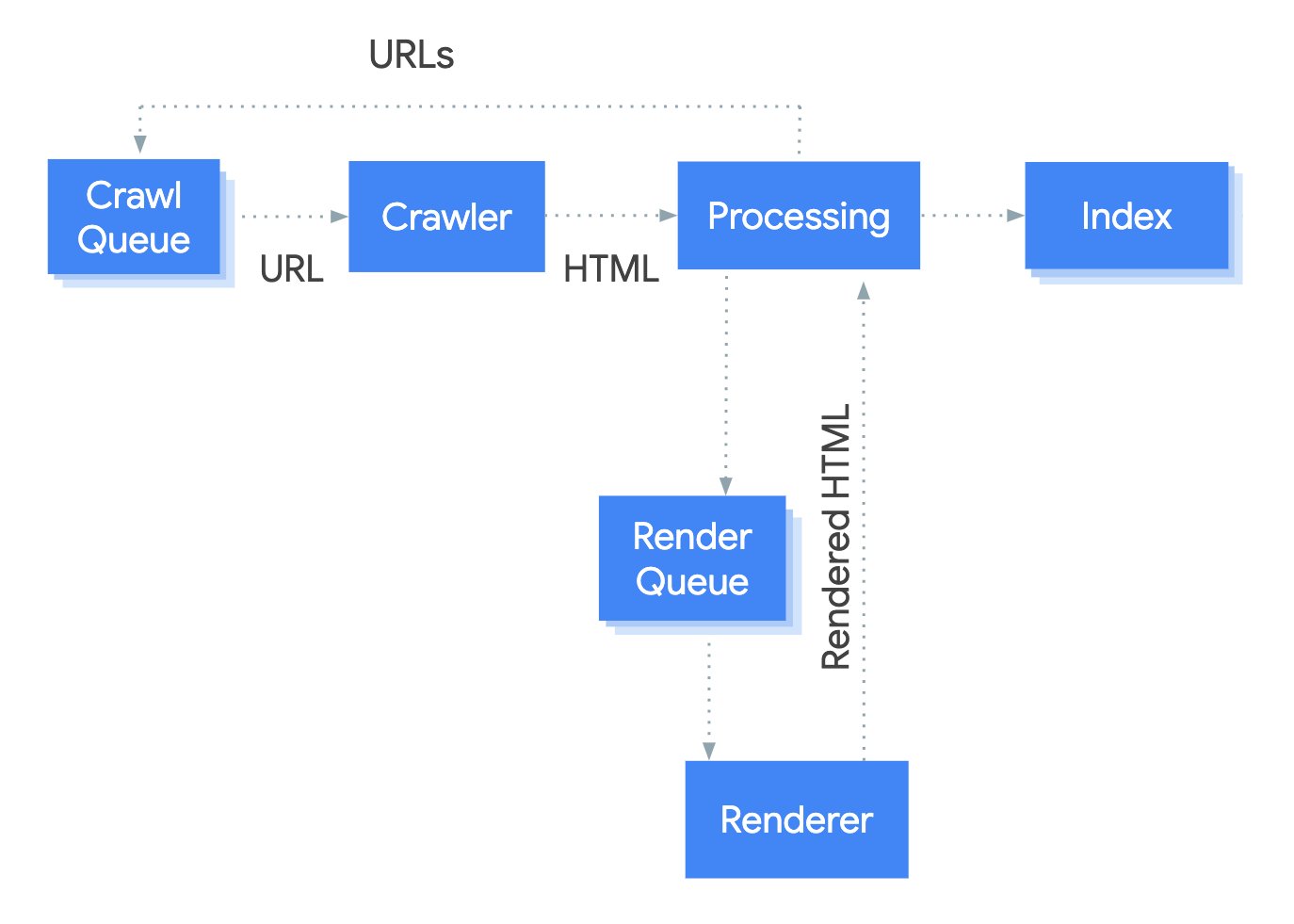

All of this requires at least a rudimentary understanding of how Google's indexation pipeline works. It isn't binary. Gary Illyes has said Google has a tiered system of indexation. Content that needs to be served more frequently is stored in a better-quality, more expensive system. Less valuable content is stored in a cheaper system.

How Google's crawling and rendering system works (Image Credit: Harry Clarkson-Bennett)

Less monkey see, monkey do; more monkey see, monkey make decision based on the site's value, crawl budget, efficiency, server load, and use of JavaScript.

The tiered approach to indexation prioritizes the perceived value and raw HTML of a page. JavaScript is queued because it's so much more resource-intensive. Hence why SEOs bang on about having your content rendered server-side.

Adam has a great guide to the types of not-indexed pages in GSC and what they mean here.

Worth noting that the page indexing report isn't completely up to date. I believe it's updated a couple of times a week. But I can't remember where I got that information, so don't hold me to that…

If you're a big news publisher, you'll see a lot of your newsier content in the Crawled - Currently Not Indexed category. But when you inspect the URL (which you absolutely should do), it might be indexed. There's a delay.

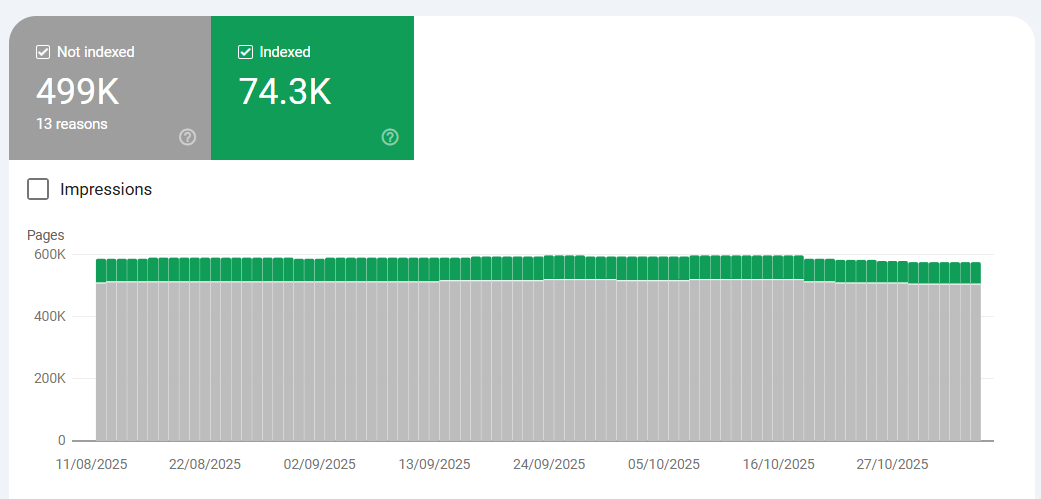

Indexing API Scalability

When you start working on larger websites (and I'm talking about websites where subfolders have well over 500,000 pages), the API's 2,000-URL limit becomes apparent. You just can't effectively identify pages that drop in and out of the "Why pages aren't indexed" section.
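Once you have enough quota to inspect a section regularly, spotting pages that drop in and out of the index is a matter of diffing snapshots. A sketch, assuming you store each run as a `{url: coverageState}` mapping taken from URL Inspection API responses; the set of "indexed" labels below is an assumption, so check it against the strings your own responses return.

```python
# Assumed coverageState labels that mean "in the index"; verify against your own data.
INDEXED_STATES = {"Submitted and indexed", "Indexed, not submitted in sitemap"}


def coverage_changes(previous, current, indexed_states=INDEXED_STATES):
    """Compare two {url: coverageState} snapshots.

    Returns (dropped_out, came_back): (url, new_state) pairs for URLs that left the
    index, and URLs that re-entered it. URLs missing from either snapshot are ignored.
    """
    dropped_out, came_back = [], []
    for url in sorted(previous.keys() & current.keys()):
        was_indexed = previous[url] in indexed_states
        is_indexed = current[url] in indexed_states
        if was_indexed and not is_indexed:
            dropped_out.append((url, current[url]))
        elif is_indexed and not was_indexed:
            came_back.append(url)
    return dropped_out, came_back
```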

Not great, have seen worse (Image Credit: Harry Clarkson-Bennett)

But when you set up multiple properties, you can scale effectively.

The 2,000-URL limit applies at the property level. So if you set up a domain-level property alongside 20 other properties (at the subfolder level), you can leverage up to 42,000 URLs per day (21 properties × 2,000). The more you add, the better.
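The capacity is just properties × 2,000, but you only get it if each URL is inspected through a property that actually contains it. A sketch of one way to schedule that, assuming domain properties are identified as `sc-domain:example.com` and URL-prefix properties by their full prefix (the format the Search Console API uses for `siteUrl`). The greedy "most specific property first" rule is an illustrative choice, not a Google recommendation.

```python
from urllib.parse import urlsplit

DAILY_QUOTA_PER_PROPERTY = 2_000  # per-property daily cap described in the article


def property_contains(property_id, url):
    """True if the URL falls under a GSC property (domain or URL-prefix)."""
    if property_id.startswith("sc-domain:"):
        domain = property_id[len("sc-domain:"):]
        host = urlsplit(url).hostname or ""
        return host == domain or host.endswith("." + domain)
    return url.startswith(property_id)


def plan_inspections(urls, properties, quota=DAILY_QUOTA_PER_PROPERTY):
    """Assign each URL to the most specific matching property that still has quota.

    Returns (plan, deferred): plan maps property -> URLs to inspect today;
    deferred holds URLs with no matching property quota left.
    """
    remaining = {p: quota for p in properties}
    plan = {p: [] for p in properties}
    deferred = []
    # URL-prefix properties before domain properties, longer prefixes first.
    by_specificity = sorted(
        properties, key=lambda p: (not p.startswith("sc-domain:"), len(p)), reverse=True
    )
    for url in urls:
        for prop in by_specificity:
            if remaining[prop] > 0 and property_contains(prop, url):
                plan[prop].append(url)
                remaining[prop] -= 1
                break
        else:
            deferred.append(url)
    return plan, deferred


def daily_capacity(properties, quota=DAILY_QUOTA_PER_PROPERTY):
    return len(properties) * quota
```

When a subfolder property runs out, its URLs spill over to the domain-level property, so the domain quota acts as a shared overflow pool.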

The API does have some other benefits, but it doesn't guarantee indexing. It's a request, not a command.

To set it up, you need to enable the API in Google Cloud Console. You can follow this semi-helpful quickstart guide. It isn't fun. It's a pain in the arse. But it's worth it. Then you'll need a Python script to send API requests and to monitor API quotas and responses (2xx, 3xx, 4xx, etc.).
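A stripped-down version of that script might look like the sketch below. It assumes you already have an OAuth 2.0 access token for an account with access to the property (obtaining one, for example with the google-auth library, is out of scope here) and posts to the URL Inspection API's `index:inspect` endpoint. The `send` parameter exists so the quota and status-code bookkeeping can be tested without network calls; treat it as a starting point, not a production client.

```python
import json
import urllib.error
import urllib.request
from collections import Counter

INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"


def http_post_json(url, payload, token):
    """POST a JSON payload with an OAuth bearer token; return (status_code, body_dict)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, json.loads(response.read() or b"{}")
    except urllib.error.HTTPError as err:
        return err.code, {}


def inspect_batch(urls, site_url, token, quota=2_000, send=http_post_json):
    """Inspect up to `quota` URLs through one property.

    Returns (coverage, status_classes, remaining):
      coverage       {url: coverageState} for successful (2xx) responses
      status_classes Counter of responses by class, e.g. {"2xx": 1990, "4xx": 10}
      remaining      URLs to retry or carry over to tomorrow
    """
    coverage, status_classes = {}, Counter()
    done = 0
    for url in urls[:quota]:
        status, body = send(INSPECT_ENDPOINT, {"inspectionUrl": url, "siteUrl": site_url}, token)
        status_classes[f"{status // 100}xx"] += 1
        if status == 429:
            break  # quota or rate limit hit: stop and retry this URL later
        done += 1  # other errors (403, 404, 5xx) are counted but not retried
        if 200 <= status < 300:
            result = body.get("inspectionResult", {}).get("indexStatusResult", {})
            coverage[url] = result.get("coverageState")
    return coverage, status_classes, urls[done:]
```

Run one batch per property per day and persist `remaining` so tomorrow's run picks up where today's stopped.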

If you want to get fancy, you can combine it with your publishing data to work out exactly how long pages in specific sections take to get indexed. And you should always want to get fancy.

This is a really good signal of what your most important subfolders are in Google's eyes, too: performant vs. underperforming categories.

Granular Click And Impression Data

An essential for large sites. Not only does default Search Console limit you to 1,000 rows of query and URL data, but it also only stores that data for 16 months. While that sounds like a long time, fast-forward a year or two, and you'll wish you had started storing the data in BigQuery.

Particularly when it comes to YoY click behavior and event planning. The teeth grinding alone will pay for your dentist's annual trip to Aruba.

But by far the easiest way to see search data at a more granular level is to create more GSC properties. While you still have the same query and URL limits per property, because you have multiple properties instead of one, those limits become far less limiting.
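A sketch of that calculation, assuming you have publish timestamps from your CMS and have recorded the first time an inspection reported each URL as indexed. Both inputs are hypothetical here, and "first seen indexed" is only as precise as your inspection schedule.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median
from urllib.parse import urlsplit


def section_of(url):
    """Top-level subfolder of a URL, e.g. /news/; root-level pages map to /."""
    segments = [s for s in urlsplit(url).path.split("/") if s]
    return "/" + segments[0] + "/" if len(segments) > 1 else "/"


def median_hours_to_index(published, first_indexed):
    """published / first_indexed: {url: datetime}. Returns {section: (median_hours, n)}.

    URLs without a first_indexed timestamp are skipped (not indexed yet).
    """
    hours = defaultdict(list)
    for url, pub in published.items():
        idx = first_indexed.get(url)
        if idx is None:
            continue
        hours[section_of(url)].append((idx - pub).total_seconds() / 3600)
    return {section: (median(vals), len(vals)) for section, vals in hours.items()}
```

The median is used rather than the mean so that one article stuck in the queue for weeks doesn't distort a whole section's figure.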

What About Sitemaps?

Not directly related to GSC indexation, but a point of note: sitemaps are not a particularly strong tool in your arsenal when it comes to encouraging indexing of content. Indexation is driven by how "useful" content is to users.

Now, it would be remiss of me not to highlight that news sitemaps are slightly different. When speed to publish and indexation are so important, you want to highlight your freshest articles in a ratified place.

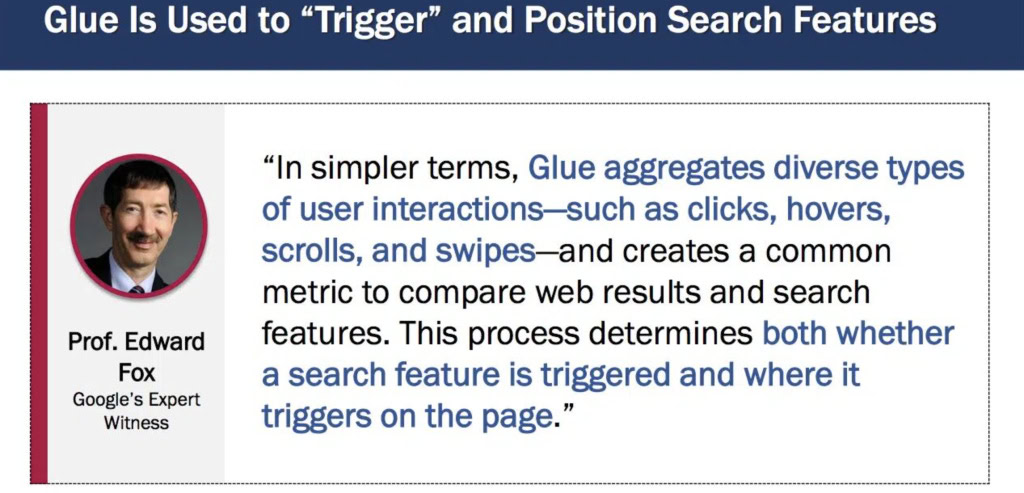

Ultimately, it comes down to Navboost: good vs. bad clicks and the last longest click. Or, in more of a news sense, Glue: a huge table of user interactions designed to rank fresh content in real time and keep the index dynamic. Indexation is driven by your content being valuable enough to users for Google to keep storing it in its index.

Glue emphasizes immediate interaction signals like hovers and swipes for more instant feedback (Image Credit: Harry Clarkson-Bennett)

Thanks to decades of experience (and confirmation via the DoJ trial and the Google Leak), we know that your site's authority (Q*), impressions over time, and internal linking structure all play a key role. But once a page is indexed, it's all about user engagement. Sitemap or no sitemap, you can't force people to love your beige, miserable content.

And Sitemap Indexes?

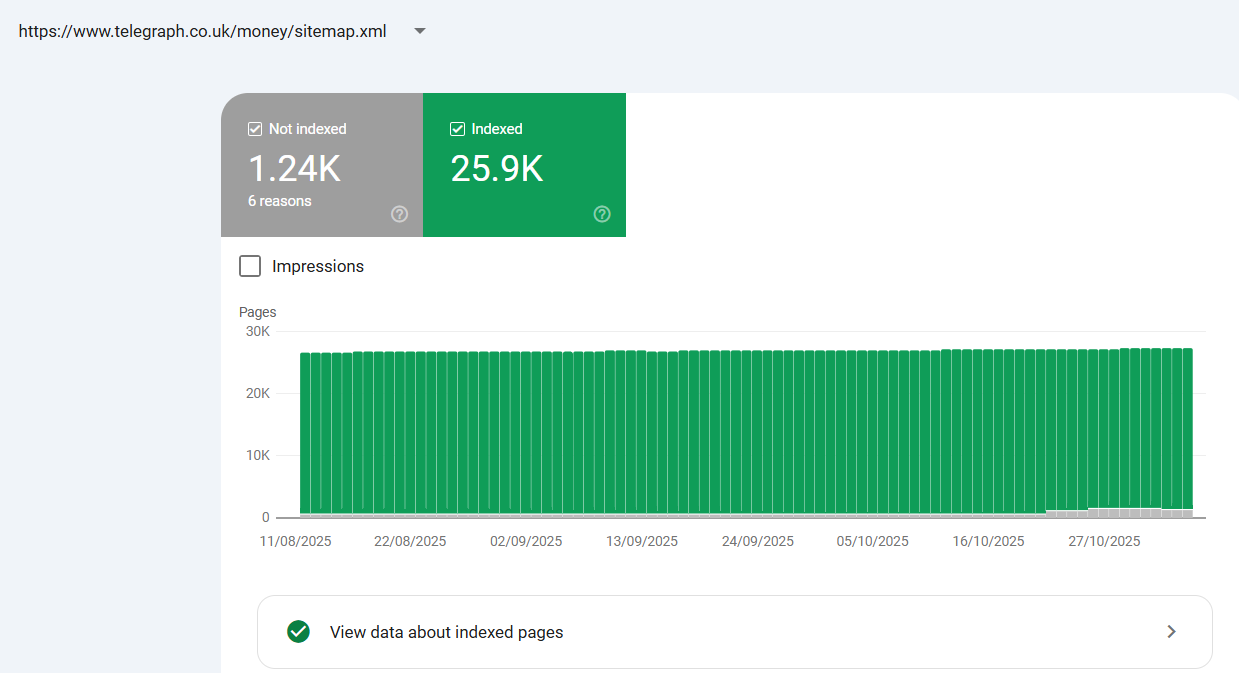

Most larger sites use sitemap indexes: essentially, a sitemap of sitemaps, used to manage websites that exceed the 50,000-URL limit for a single sitemap. When you upload the sitemap index to Search Console, don't stop there. Upload every individual sitemap in your sitemap index, too.

This gives you sitemap-level indexation data in the page indexing and sitemaps reports. Something that's much harder to get at when you have millions of pages in a sitemap index.
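If your sitemap index is long, pulling out the child sitemaps to add is easy to script. A minimal sketch using Python's standard XML parser and the sitemaps.org namespace; it also counts `<url>` entries so you can spot any child sitemap over the 50,000-URL protocol limit. Fetching the files and submitting them (in the UI, or via the Search Console API's sitemaps endpoint) isn't shown.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol


def child_sitemaps(sitemap_index_xml):
    """Return the <loc> of every <sitemap> listed in a sitemap index (bytes or str)."""
    root = ET.fromstring(sitemap_index_xml)
    return [loc.text.strip() for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS) if loc.text]


def url_count(sitemap_xml):
    """Count <url> entries in one sitemap; the flag is False if it breaks the limit."""
    root = ET.fromstring(sitemap_xml)
    n = len(root.findall("sm:url", SITEMAP_NS))
    return n, n <= MAX_URLS_PER_SITEMAP
```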

Seeing data at a sitemap level gives you more granular indexation data in GSC (Image Credit: Harry Clarkson-Bennett)

Take the same approach with sitemaps as we've discussed with properties. More is generally better.

Worth knowing that each document is also given a DocID. The DocID stores signals used to score the page's popularity: user clicks, its quality and authoritativeness, crawl data, and a spam score, among others.

Anything classified as important to ranking a page is stored and used for indexation and ranking purposes.

What Should I Do Next?

- Assess your current GSC setup: is it working hard enough for you?

- Do you have access to a domain-level property and a crawl stats report?

- Have you already broken your site down into "properties" in GSC?

- If not, crawl your site and establish the subfolders you want to add.

- Review your sitemap setup. Do you just have a sitemap index? Have you added the individual sitemaps to GSC, too?

- Consider connecting your data to BigQuery and storing more than 16 months of it.

- Consider connecting to the API via Google Cloud Console.

- Review the above tools and see if they'd add value.

Ultimately, Search Console is very useful. But it has significant limitations, and to be fair, it's free. Other tools have surpassed it in many ways. But if nothing else, you should make it work as hard as possible.


This post was originally published on Leadership in SEO.

Featured Image: N Universe/Shutterstock